FSD tried to kill me again. Yes, really. Let me explain.

If you’ve driven with Tesla’s Full Self-Driving (FSD) lately, or even just Autopilot, you’ve likely encountered some quirks. But on November 5th, my experience with FSD crossed a line from minor inconveniences to serious safety issues. Despite being a tech enthusiast who supports autonomous driving, this latest experience pushed my confidence in FSD to a new low.

Tuesday Afternoon with FSD: Promising Improvements

After a long trip, I was ready to put FSD to the test on a 70-mile drive back from an appointment at Stanford. The traffic was a little lighter than usual for Silicon Valley rush hour, likely due to it being Election Day. Still, there were pockets of stop-and-go congestion, making it a good chance to see how FSD had improved since my last trial in June.

Surprisingly, I did notice progress:

Improved Lane Positioning: No more hugging the right side in merging zones.

Motorcycle Awareness: FSD now moves over for motorcycles, which is a big step forward, especially in California, where motorcycles can legally “white line” (split lanes).

"Chill Mode" Lane Etiquette: I adjusted FSD to minimize lane changes, and the system respected it, making fewer risky maneuvers. When I wanted to switch lanes, I simply signaled, and the car took the hint.

For the first hour, I felt optimistic.

The Incident: An Unexpected Veer off the Road

But then, as we approached Good Samaritan Hospital on Highway 101 Southbound, FSD took a terrifying turn—literally. Out of nowhere, the car suddenly veered sharply toward the shoulder. I was in the carpool lane, thankfully, so there was no guardrail, just open space. I immediately took control, “tapping out” of FSD, and steered back onto the highway. But the shock of losing control lingered.

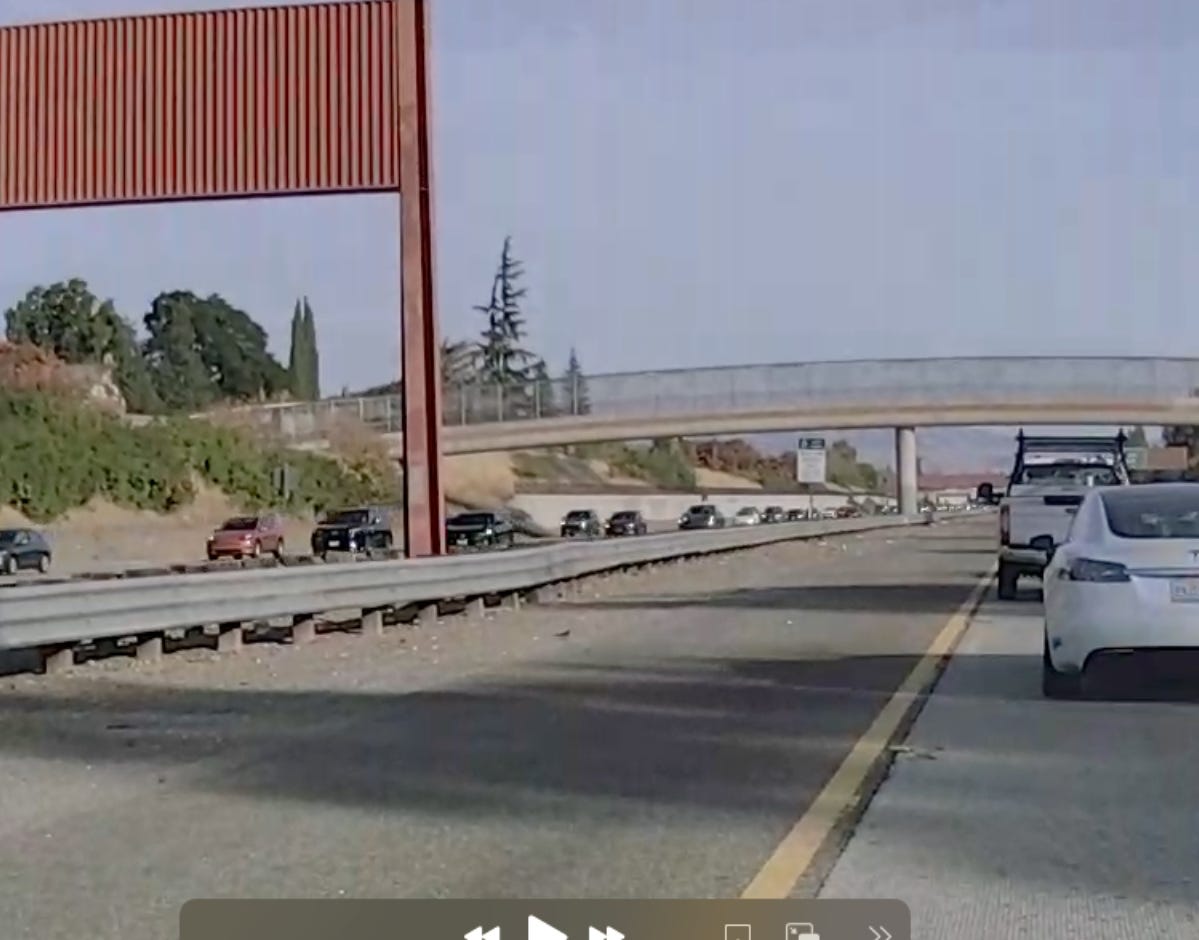

Had something triggered this maneuver? Perhaps a motorcyclist? Or a car creeping too close in the next lane? I scanned the dashcam footage at home to check. But no, nothing was there—no encroaching driver, no near-invisible motorcycle. FSD had apparently made this maneuver on its own.

Caption: This is a screencap from my back camera. No motorcyclist. No car approaching too close. No one drifting into my lane. Everyone was driving soooo nicely.

The driver behind me must have thought I was a drunk driver, and in a way, they weren’t entirely wrong. I had no control of the car for those few seconds, and that realization was sobering.

I've "tapped" in and out of Autopilot hundreds of times, so I can do it very quickly. Wrestling control away from Full Self-Driving always feels a little harder, as if the car doesn't want to let go of the wheel. In this video, though, it looks seamless as I get back on the road. What you are not seeing is the shock on my face, and what you are not hearing is the beating of my heart or me yelling: "What the f$##@!!?" I don't often curse, but when I do, it is memorable.

Community Experiences and Reporting Issues

I shared the experience with the women in my Tesla Divas group on Facebook. Several other women reported similar incidents with their cars during the current FSD free trial. My husband forwarded me a screenshot that same day from his Model Y owners group with a story very similar to mine.

Why is this happening? Is FSD simply not yet reliable enough to make decisions autonomously?

Tesla’s Safety Data and the Need for Greater Transparency

Tesla’s Vehicle Safety Report claims that cars driven with Autopilot engaged have a lower crash rate per mile compared to those driven without it. For instance, in Q3 2024, Tesla reported one crash for every 7.08 million miles with Autopilot, versus one crash per 1.29 million miles without it. While these numbers imply that Autopilot enhances safety, there’s a lack of transparency in how Tesla presents this data.

One significant point is that Tesla combines Autopilot and Full Self-Driving (FSD) statistics, even though FSD is an optional, more advanced system intended for complex driving environments beyond highways, where the risks and challenges are considerably different. By not separating these statistics, Tesla leaves drivers without a clear understanding of how FSD performs relative to standard Autopilot. Additionally, the data does not specify the types of roads or conditions under which crashes occurred, factors that can substantially affect safety outcomes. Greater clarity on these distinctions would provide consumers with a more realistic view of FSD’s current safety profile, allowing them to make better-informed decisions about this advanced (and still evolving) technology.
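To see what the headline numbers do and do not say, here is a minimal sketch that converts the two Q3 2024 figures quoted above into crashes per million miles and computes the ratio between them. The variable and function names are my own, chosen for illustration; the only inputs are the two figures from Tesla's report.

```python
# Figures quoted above from Tesla's Q3 2024 Vehicle Safety Report.
MILES_PER_CRASH_WITH_AUTOPILOT = 7.08e6
MILES_PER_CRASH_WITHOUT_AUTOPILOT = 1.29e6


def crashes_per_million_miles(miles_per_crash: float) -> float:
    """Convert 'one crash every N miles' into crashes per million miles."""
    return 1e6 / miles_per_crash


with_ap = crashes_per_million_miles(MILES_PER_CRASH_WITH_AUTOPILOT)
without_ap = crashes_per_million_miles(MILES_PER_CRASH_WITHOUT_AUTOPILOT)

# How many times farther Tesla says its cars travel between crashes
# with Autopilot engaged than without it.
ratio = MILES_PER_CRASH_WITH_AUTOPILOT / MILES_PER_CRASH_WITHOUT_AUTOPILOT

print(f"With Autopilot:    {with_ap:.2f} crashes per million miles")
print(f"Without Autopilot: {without_ap:.2f} crashes per million miles")
print(f"Headline ratio:    {ratio:.1f}x")
```

This works out to roughly 0.14 versus 0.78 crashes per million miles, or about a 5.5x difference. The arithmetic cannot tell us how much of that gap comes from the software and how much comes from where and when it is switched on, because the report gives a single blended figure with no breakdown by system (Autopilot versus FSD) or by road type.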

Fatal Crash Highlights FSD’s Safety Challenges

A recent fatality involving a pedestrian has brought serious attention to Tesla's Full Self-Driving (FSD) technology. According to a Verge report, the National Highway Traffic Safety Administration (NHTSA) is investigating multiple incidents involving FSD, including the recent pedestrian death. This investigation raises questions about Tesla’s approach to autonomous technology, especially since Tesla CEO Elon Musk has been vocal about his opposition to using LIDAR—a technology that many in the autonomous vehicle industry view as essential for detecting obstacles in a variety of lighting and weather conditions. Musk has said, “LIDAR is a fool’s errand,” believing Tesla’s camera-based vision system is sufficient. However, these incidents suggest that the FSD system may struggle in certain scenarios where LIDAR could potentially enhance object detection and safety. As the investigation unfolds, it may further underscore the need for comprehensive sensor systems that can reliably prevent tragic incidents like this one.

Could Sun Glare Be FSD’s Achilles’ Heel?

As I reflected on the sudden swerve toward the shoulder, I began to wonder: Could sun glare have been the hidden culprit? According to recent reports, Tesla’s Full Self-Driving system has shown vulnerabilities in certain lighting conditions, especially bright sunlight and glare, which can degrade what the camera-based vision system is able to see.

The National Highway Traffic Safety Administration (NHTSA) has also taken notice. In a recent probe involving 2.4 million Tesla vehicles, NHTSA investigators are examining cases where FSD may have malfunctioned under challenging visibility conditions. This includes sun glare, which has contributed to multiple crashes. In fact, NHTSA is focusing on FSD crashes where lighting conditions are suspected to have interfered with the car’s ability to “see” clearly, leading to unexpected and sometimes fatal outcomes.

As a Tesla driver, I find these reports seriously concerning: Could sun glare, something every human driver knows to handle with caution, actually be an Achilles' heel for FSD? Given the high stakes of autonomous driving, even a common variable like sunlight could undermine FSD's reliability in ways Tesla must address.

Challenges & The Path Forward

Perfecting Full Self-Driving (FSD) is a significant task, and Tesla must address several critical areas to improve user trust and road safety.

Enhanced Edge Case Handling: FSD needs to master rare but essential driving scenarios, such as complicated highway merges or crowded city traffic, that it currently struggles with. Handling these "edge cases" reliably is essential for any vehicle aiming for full autonomy.

Clearer Real-Time Feedback to Drivers: When FSD makes unexpected moves, drivers are left wondering why the car made the decision it did. Tesla could improve transparency by providing a brief, real-time explanation for actions like sudden lane changes or erratic steering, helping drivers understand if the car detected a threat or made a minor error.

Transparent Incident Reporting with User Feedback: Tesla’s “report bug” feature is a vital tool, enabling drivers to alert Tesla to issues in real time. However, many drivers, myself included, report that this feedback goes into a "black hole," with no acknowledgment or follow-up from Tesla.

Final Thoughts: The Path to True Autonomy

Tesla’s ambition to achieve full autonomy with just cameras, AI, and software has captured the public’s imagination, but my experience—and mounting evidence—suggest it may be fatally flawed. Unlike Waymo and other companies that have invested in hardware-based solutions like LIDAR and radar to ensure accurate, reliable sensing of the environment, Tesla’s FSD is attempting to interpret the world through cameras alone. This “vision-only” approach overlooks a fundamental truth: cameras, no matter how sophisticated, struggle in conditions like sun glare, fog, or low-light situations that human drivers—and more robust hardware—can handle more adeptly.

To put it bluntly, a fully autonomous Tesla without LIDAR and radar seems destined to remain a vision, not a reality. Elon Musk’s stance against these additional sensors, dismissing LIDAR as “a fool’s errand,” raises serious questions about the risks he’s willing to accept for Tesla drivers. How many Teslas need to veer off the road, or worse, before he reconsiders? Autonomy requires more than intelligence; it requires real-world adaptability, and that demands a comprehensive sensor suite, not just cameras. Until Tesla recognizes this, the promise of a safe, autonomous Tesla on every street will remain out of reach.

A Tesla Owner, a Skeptic, and a Writer on Autonomy’s Unfinished Journey.

Stay Curious. Stay Informed. #DeepLearningDaily.

Additional Resources for Inquisitive Minds

"U.S. Opens Probe into 2.4 Million Tesla Vehicles Over Full Self-Driving Collisions." Reuters, 18 Oct. 2024, www.reuters.com/business/autos-transportation/nhtsa-opens-probe-into-24-mln-tesla-vehicles-over-full-self-driving-collisions-2024-10-18/.

"NHTSA Probes Tesla Full Self-Driving Crashes in Reduced Visibility." The Verge, 18 Oct. 2024, www.theverge.com/2024/10/18/24273418/nhtsa-tesla-full-self-driving-investigation-crashes.

"Tesla's 'Full Self-Driving' Feature Under Scrutiny After Deadly Incidents." New York Post, 18 Oct. 2024, nypost.com/2024/10/18/business/nhtsa-to-investigate-elon-musks-tesla-after-several-full-self-driving-crashes/.

"Tesla Vehicle Safety Report." Tesla, www.tesla.com/VehicleSafetyReport. Accessed 11 Nov. 2024.

Vocabulary Key:

FSD (Full Self-Driving): Tesla's advanced driving software that allows the car to perform certain autonomous functions.

Autopilot: Tesla's earlier version of driver-assistance technology, which manages basic highway driving but isn't fully autonomous.

Dashcam Footage: Video recording from a camera on the car's dashboard that captures events during a drive.

Edge Case: Rare or unexpected situation that software might not handle well.

LIDAR: A technology that uses laser light to detect and measure objects; widely used in autonomous driving systems for enhanced accuracy in obstacle detection.

FAQ:

Has this kind of veering behavior been reported before? Yes, many Tesla drivers have reported similar incidents where FSD made unexpected lane shifts or veered toward the shoulder.

Why did FSD veer off the road? Sun glare and other environmental factors may be influencing FSD’s sensor performance, as highlighted in recent federal investigations.

Is FSD dangerous? When FSD behaves unpredictably, it can create unsafe situations, so it’s essential for drivers to remain attentive and ready to take over.

Why doesn’t Tesla distinguish between Autopilot and FSD in its safety data? Tesla’s reports combine the two systems, which obscures FSD’s specific safety profile and may not give an accurate picture of FSD’s performance.

Will FSD reach full autonomy soon? Experts and recent investigations suggest it may be years before FSD can operate without a human in the loop.

#TeslaFSD #AutonomousDriving #FullSelfDriving #ElonMusk #TeslaSafety #Autopilot #SelfDrivingCars #TechSkepticism #InnovationAndRisk #DrivingSafety #AI #MachineLearning #HumanInTheLoop #AutonomousVehicles #Robotaxi
