I considered writing about OpenAI's agents tonight, but I'm not convinced I need AI to order my groceries. Agents will find their place, but as we close out an interesting week for AI, I wanted to look at the bigger picture: the future of humanity.

Mission-critical systems require oversight. That's been true since the first nuclear reactors came online, since the first commercial airlines took flight, since the first pharmaceutical companies developed life-saving drugs. But this week, as artificial intelligence—potentially the most transformative technology in human history—shed its federal oversight, a crucial question emerges: Who's watching the watchmen?

The Regulatory Vacuum

In a swift series of moves that transformed AI oversight in America, President Trump dismantled the federal safeguards put in place to monitor and regulate AI development. The U.S. AI Safety Institute's mandate to test and evaluate powerful AI systems before release? Gone. Requirements for companies to share safety test results? Eliminated. Federal guidelines for responsible AI development? Rescinded.

Into this void step two unlikely guardians: state governments and the very companies developing these powerful systems.

The New Guardians: Venture Capitalists and Tech Titans

The appointment of David Sacks as AI Czar adds another layer to this complex picture. A venture capitalist and PayPal co-founder alongside Elon Musk and Peter Thiel, Sacks brings what legal experts describe as a "pro-innovation, pro-startup" approach to AI oversight. But this appointment raises a fundamental question: Should venture capitalists, whose primary focus is return on investment, be entrusted with safeguarding humanity's interests in AI development?

The interconnected relationships here tell a fascinating story about power and oversight in the AI era. Sacks, Musk, and OpenAI's Sam Altman represent a small circle of tech elites now wielding enormous influence over AI's future. Musk's own journey exemplifies the contradictions at play: He co-founded OpenAI as a nonprofit to counter Google's AI dominance, warning about the dangers of for-profit companies controlling AI's future. Yet after leaving OpenAI in a dispute over control, he launched xAI—a for-profit company that, ironically, "will not have any guardrails against disinformation and hate speech."

Even Musk's role as a government advisor through the Department of Government Efficiency seems at odds with his past positions. (A “Department” that is not actually a “department,” and a name selected because he thought the acronym was funny.) His warnings about AI as an existential threat to humanity—which sparked his lawsuit against OpenAI's transition to a for-profit model—appear inconsistent with his current stance on deregulation. Much like FSD Beta in a roundabout, Musk's positions on AI safety veer off unpredictably.

These contradictions highlight a crucial problem: When venture capitalists become regulators, and AI critics become AI developers, who truly stands guard over humanity's interests?

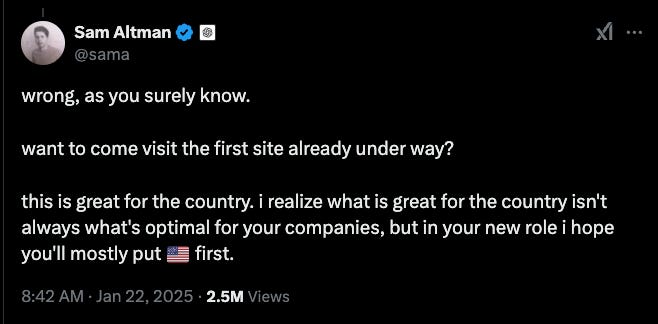

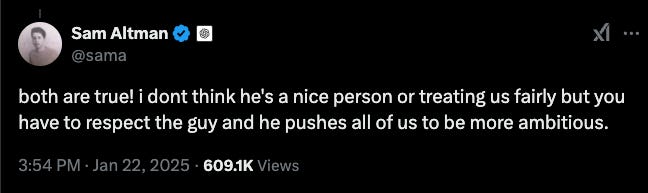

The tension played out publicly this week when Elon Musk, now a government advisor, criticized OpenAI's involvement in the $500 billion Stargate project. OpenAI's Sam Altman fired back: "i realize what is great for the country isn't always what's optimal for your companies, but in your new role i hope you'll mostly put 🇺🇸 first."

This exchange reveals the fundamental challenge: Those tasked with safeguarding humanity's interests are the same ones competing for market dominance.

And these are some of the men now tasked to “watch over humanity” when it comes to AI.

“Benefits All of Humanity”

The major AI companies have built their identities around commitments to humanity's wellbeing. OpenAI's charter explicitly promises to ensure that artificial general intelligence "benefits all of humanity." Anthropic, which describes itself as an AI safety and research company and is organized as a public benefit corporation, emphasizes building “safer systems.” Google DeepMind declares its mission to “Build AI responsibly to benefit humanity.” Elon Musk’s xAI seeks to “Understand the Universe.” (At the bottom of xAI’s mission page, there is a mention of safety: “Our team is advised by Dan Hendrycks who currently serves as the director of the Center for AI Safety.”)

Between Mission Statements and Today’s Reality

Anthropic develops "constitutional AI" to embed ethical principles directly into systems, while Google DeepMind commits to being "socially beneficial." But without external verification, who ensures these private guardrails are sufficient?

The gap between companies' idealistic missions and their practical actions raises a crucial question: Can we trust corporations to regulate themselves when profit motives may conflict with humanity's best interests?

State-Level Safeguards

In 2024 alone, nearly 700 AI-related bills were introduced across different states. While this shows widespread recognition of the need for oversight, it also creates a complex maze of regulations that companies must navigate. More importantly, can state-level regulations effectively govern a technology that knows no borders?

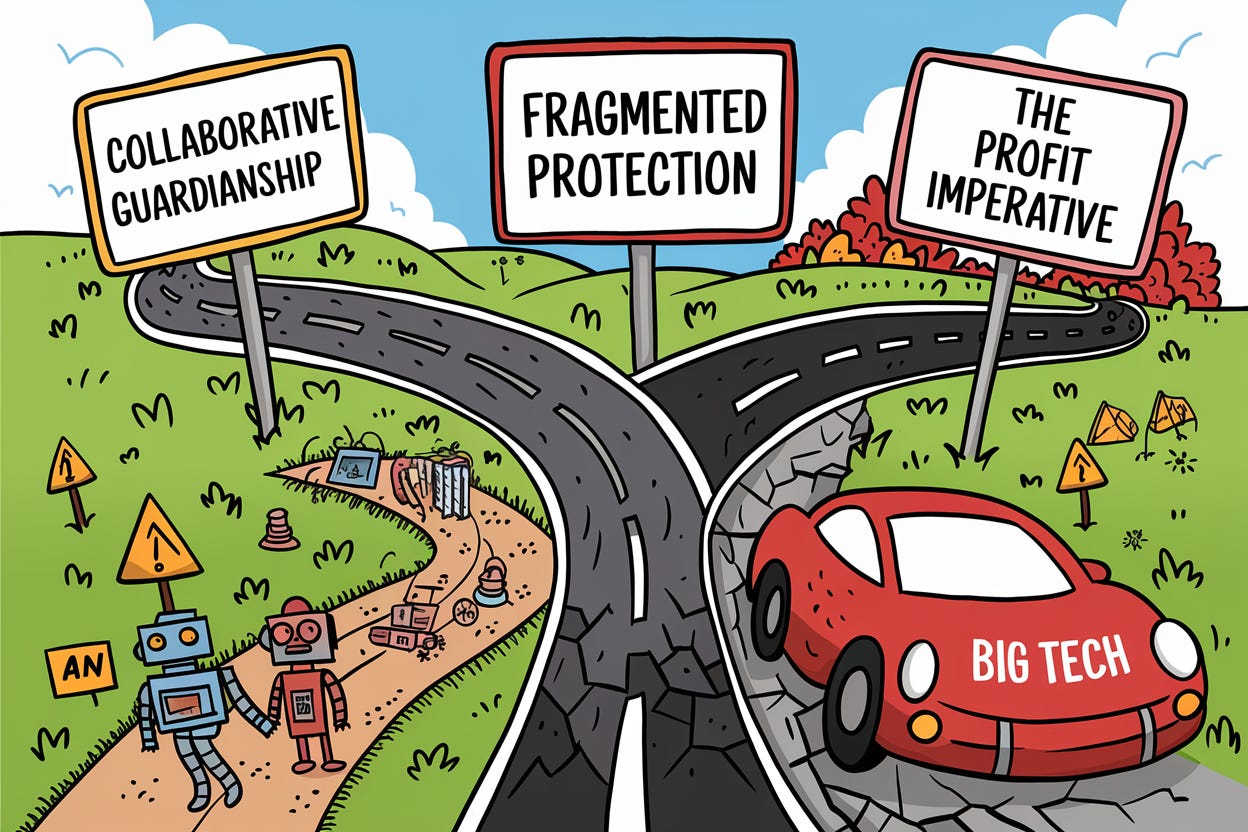

Three Possible Futures

Best-Case Scenario: Collaborative Guardianship

Companies recognize that long-term profitability depends on maintaining humanity's trust. They establish rigorous self-regulation frameworks, while states provide baseline protections and accountability measures.

Most Likely Scenario: Fragmented Protection

Different states implement varying levels of oversight while large tech companies establish their own standards. Smaller companies struggle with compliance, while gaps in protection emerge at the intersections of different jurisdictional requirements.

Worst-Case Scenario: The Profit Imperative

Without federal oversight, competitive pressures drive companies to prioritize rapid development over safety. State regulations prove ineffective at controlling a technology that operates beyond geographical boundaries.

The Stakes

The dismantling of federal AI oversight isn't just a policy change—it's a fundamental shift in how we approach one of the most powerful technologies humanity has ever developed. The question isn't just academic. As AI systems grow more capable and autonomous, the guardrails we establish (or fail to establish) today will shape humanity's future.

Who safeguards humanity in this new era? The uncomfortable answer is: all of us, and therefore potentially no one. Companies must balance profit motives with ethical imperatives. States must coordinate to prevent regulatory arbitrage. And the public must remain vigilant, demanding transparency and accountability from both corporate and government actors.

The next few months will reveal whether this decentralized approach to AI oversight can effectively protect humanity's interests. The stakes couldn't be higher.

Key Terms and Concepts

AI Economic Zones - Proposed areas with streamlined permitting processes for AI infrastructure development, including data centers and energy facilities. OpenAI introduced this concept in its Economic Blueprint to avoid what it calls a "regulatory patchwork."

Constitutional AI - An approach developed by Anthropic that aims to embed ethical principles directly into AI systems during their development, rather than applying restrictions after the fact.

Department of Government Efficiency (DOGE) - A new advisory body led by Elon Musk, tasked with reducing federal spending and streamlining government operations.

Project Stargate - A $500 billion AI infrastructure initiative announced by the Trump administration, involving partnerships between OpenAI, Oracle, and SoftBank to build data centers and other AI infrastructure in the United States.

U.S. AI Safety Institute (AISI) - The recently disbanded federal organization, formerly housed within the Department of Commerce, that was responsible for testing and evaluating powerful AI systems before their release.

Frequently Asked Questions

What exactly changed with federal AI oversight? The Biden administration's executive order requiring companies to share safety test results and submit to federal evaluation before releasing new AI models was rescinded. This effectively removed the main federal mechanism for monitoring AI development.

Why are state regulations a potential problem? With nearly 700 different AI-related bills introduced across states in 2024 alone, companies face a complex maze of varying requirements. This "regulatory patchwork" could make it difficult to develop and deploy AI systems consistently across state lines.

What's the significance of David Sacks' appointment? As AI Czar, Sacks brings a venture capitalist's perspective to AI oversight. His appointment signals a shift toward a more market-driven approach to AI regulation, prioritizing innovation and economic growth over government oversight.

How are AI companies regulating themselves? Companies are taking different approaches. Anthropic develops "constitutional AI" with built-in ethical principles. OpenAI advocates for economic zones and unified standards. Google DeepMind emphasizes social benefit in its development process. However, without external verification, the effectiveness of these self-imposed guardrails remains uncertain.

Why does the Musk-Altman exchange matter? Their public disagreement over Project Stargate highlights the fundamental tension in current AI oversight: those responsible for safeguarding humanity's interests often have competing business interests that might conflict with that responsibility.

What role do states play now? States have become the primary source of AI regulation in the U.S., with each state developing its own approach. This creates a complex regulatory environment where companies must navigate different requirements in different jurisdictions.

How does this affect smaller AI companies? Smaller companies may struggle to comply with varying state regulations and might find it harder to compete with larger companies that can more easily navigate the complex regulatory landscape.

What the WolfPack Is Reading:

PYMNTS. How AI Regulations May Change With New Trump White House. (January 20, 2025.)

ITI. Governments Must Seize This Pivotal Moment for International AI Safety Cooperation. (November 19, 2024.)

INDIATV. Elon Musk raises concerns on AI's potential risks: Warns of 10-20 per cent chance of ‘Going Bad.’ (October 30, 2024.)

r/TeslaMotors. FSD Beta roundabout hell.

TechPolicy.Press. How Elon Musk’s Influence Could Shift US AI Regulation Under the Trump Administration.

Center for Strategic and International Studies. The AI Safety Institute International Network: Next Steps and Recommendations. (October 30, 2024.)

#airegulation #techpolicy #artificialintelligence #aigovernance #aisafety #aioversight #techethics #aifuture #humanitysfuture #opensourceai #aiguidelines #techpolicymatters #elonmusk #samaltman #muskaltmanfeud #aihumanity
