The term "world model" has become increasingly prominent in AI discussions, particularly following NVIDIA's announcement of Cosmos at CES 2025 and OpenAI's release of Sora. But what exactly constitutes a world model, and how does it differ from the large language models we've become familiar with? As we'll explore, the answer is evolving alongside the technology itself.

The Academic Foundation

The concept of world models has deep roots in artificial intelligence research. In the 1990s, Jürgen Schmidhuber proposed systems that could learn to predict and understand their environment. This foundational work was later expanded in the influential 2018 paper "World Models" by David Ha and Jürgen Schmidhuber. That paper showed how an agent could compress its observations into a compact representation, learn to predict how that representation evolves over time, and use those predictions to decide how to act.

Evolving Understanding of World Models

Rather than adhering to a rigid definition, world models are better understood as existing on a spectrum of capabilities. Researchers have identified several key aspects that contribute to world modeling abilities:

Physical Understanding: The ability to grasp and predict physical dynamics

Interactive Learning: Capability to learn from and adapt through environmental interaction

State Representation: Methods for maintaining and updating internal models of the world

These aspects represent current thinking about important capabilities rather than fixed criteria, and our understanding continues to evolve as the field advances.
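To make the physical-understanding and state-representation aspects a little more concrete, here is a minimal sketch in Python. It is a toy under stated assumptions and does not show how Cosmos, Sora, or the Ha and Schmidhuber system actually work. The "world" is a ball dropped from 10 m, and the internal state is a hypothetical (height, velocity) pair. `predict` stands in for physical understanding by rolling that state forward under gravity. `update` stands in for state representation by blending the prediction with a new observation. The class name `ToyWorldModel`, the blend factor `alpha`, and the sensor readings are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class State:
    """Hypothetical internal state: height (m) and vertical velocity (m/s)."""
    height: float
    velocity: float


class ToyWorldModel:
    """A toy world model for a falling ball (illustrative only)."""

    def __init__(self, gravity: float = 9.81, alpha: float = 0.5):
        self.gravity = gravity  # assumed physical prior, m/s^2
        self.alpha = alpha      # how much to trust an observation vs. the prediction

    def predict(self, state: State, dt: float) -> State:
        """Physical understanding: roll the state forward under constant gravity."""
        velocity = state.velocity - self.gravity * dt
        height = state.height + state.velocity * dt - 0.5 * self.gravity * dt ** 2
        if height <= 0.0:
            # The ball comes to rest on the floor (no bounce is modeled).
            return State(height=0.0, velocity=0.0)
        return State(height=height, velocity=velocity)

    def update(self, predicted: State, observed_height: float) -> State:
        """State representation: blend the prediction with a new observation."""
        height = (1 - self.alpha) * predicted.height + self.alpha * observed_height
        return State(height=height, velocity=predicted.velocity)


model = ToyWorldModel()
state = State(height=10.0, velocity=0.0)
# Illustrative readings, one every 0.1 s, roughly what an ideal 10 m drop would give.
for observed in [9.95, 9.80, 9.56]:
    predicted = model.predict(state, dt=0.1)
    state = model.update(predicted, observed)
    print(f"predicted {predicted.height:.3f} m, believed {state.height:.3f} m")
```

Learned world models replace the hand-written physics in `predict` with a model trained on data. That substitution is where keeping the physics consistent becomes hard to guarantee.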

Commercial Implementations and Progress

Recent commercial systems are beginning to realize some of these capabilities. NVIDIA's Cosmos, trained on 20 million hours of video focused on physical dynamics, is an ambitious attempt to build a foundation model that understands physical interactions. It may not achieve everything academic research envisions for world models, but it demonstrates meaningful progress in physical understanding and prediction.

Testing World Model Capabilities: A Systematic Analysis

Our systematic testing of OpenAI's Sora reveals interesting patterns in current world model capabilities. Through a series of controlled experiments designed to test physical understanding, we observed:

1. Strong Performance Areas:

Basic physical interactions (stacking objects, balance)

Single-agent activities (bicycle riding instruction)

Simple cause-and-effect relationships

2. Current Limitations:

Inconsistent physics enforcement across multiple attempts

Difficulties with fluid dynamics and complex material properties

Tendency to alternate between realistic physics and "movie-style" physics

The test: I tasked Claude with creating a test for Sora. Here is the prompt I gave Claude: “How can I test Sora to see whether it is a world model. What prompts could I use to test its "world model" abilities?”

Claude responded: “This is a fascinating question! Let's design some systematic tests to probe Sora's understanding of physical reality and causality. We can base these tests on the key requirements of true world models we discussed.”

The prompts Claude designed were meant to test for:

Temporal consistency

Respect for natural laws

Physical accuracy
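One way to make tests like these more systematic is to run each prompt several times and record a pass/fail judgment for every generated video. Doing so directly measures the inconsistent physics across multiple attempts noted earlier. The sketch below assumes a human judges each video by hand. It does not call Sora. `PhysicsTest`, `summarize`, and the paraphrased prompts are hypothetical names and wording, not Claude's actual test prompts.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PhysicsTest:
    """One test prompt, the criterion it probes, and a verdict per generated video."""
    name: str
    criterion: str  # e.g. "temporal consistency", "natural laws", "physical accuracy"
    prompt: str
    verdicts: List[bool] = field(default_factory=list)

    def record(self, passed: bool) -> None:
        """Store a human pass/fail judgment for one generation of this prompt."""
        self.verdicts.append(passed)

    def pass_rate(self) -> float:
        return sum(self.verdicts) / len(self.verdicts) if self.verdicts else 0.0

    def is_consistent(self) -> bool:
        """True if every attempt received the same verdict (vacuously true if none)."""
        return len(set(self.verdicts)) <= 1


def summarize(tests: List[PhysicsTest]) -> Dict[str, float]:
    """Pool all attempts per criterion and return the pass rate for each."""
    pooled: Dict[str, List[bool]] = defaultdict(list)
    for test in tests:
        pooled[test.criterion].extend(test.verdicts)
    return {criterion: sum(v) / len(v) for criterion, v in pooled.items() if v}


tests = [
    PhysicsTest("tomato", "physical accuracy",
                "A chef slices a tomato with a dull knife"),
    PhysicsTest("cat", "temporal consistency",
                "A cat walks behind a couch and emerges on the other side"),
]
# Hypothetical verdicts for illustration only; these are not this article's results.
for passed in (True, False, True):
    tests[0].record(passed)
for passed in (False, False, False):
    tests[1].record(passed)
print(summarize(tests))
```

A low `pass_rate` flags a capability gap. A prompt that fails `is_consistent` flags the alternation between realistic and "movie-style" physics listed among the current limitations.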

Watching someone cut a tomato with a dull knife is cringe-worthy. However, Sora did better at this test than other AI-generated videos of this kind I’ve seen online: our aspiring chef had the knife pass through his hand only once.

I’m in love with this cat and the graceful way it stalks back and forth in front of the couch. The only problem: the prompt asked for the cat to walk behind the couch and emerge on the other side.

At first glance, I thought this one was a “success.” The oranges are balanced and stable. But then I noticed the human’s fruitless movements. Bang. Bang. Bang. Where does this orange go? It’s almost mesmerizing, like a new form of fruity Tetris.

Real-World Applications and Limitations

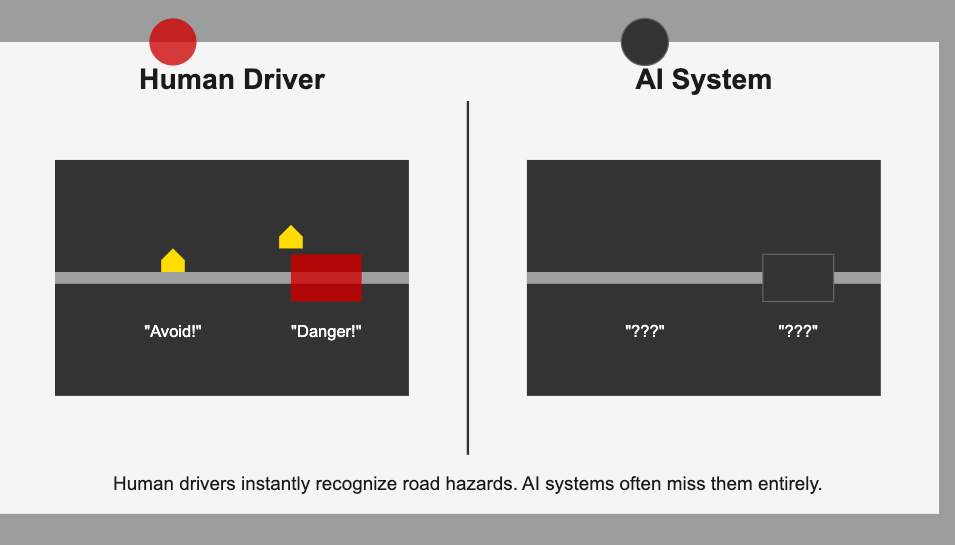

The gap between current capabilities and true world understanding becomes particularly evident in autonomous driving systems. Drawing on two years of experience with Tesla's Full Self-Driving (FSD) technology and extensive interaction with Tesla owner communities, I've observed a consistent pattern: while these systems handle standard driving scenarios well, they often struggle with situations that require an intuitive understanding of the physical world.

For example, where human drivers instinctively recognize and avoid potholes to protect their vehicles, FSD often fails to identify these hazards and drives directly into them at full speed. In one particularly telling incident, my Tesla failed to detect a ladder lying in the middle of the highway, an obstacle that any human driver would immediately recognize as potentially catastrophic and requiring evasive action. I had to quickly disengage FSD and steer around it manually. Driving is easy for humans and hard for AI.

These cases highlight a crucial limitation: while current AI systems can process vast amounts of data and recognize patterns, they lack the intuitive physical understanding that humans use to immediately grasp the implications of objects in their environment. A human driver inherently understands why running over a ladder at highway speeds would be disastrous, or how a pothole might damage their vehicle. This kind of causal reasoning and physical understanding represents the gap between current pattern-matching systems and true world models.

And, as a treat for a future article, I’ll dig up that ladder footage from my dashcam, along with several other examples like it, so we can see firsthand the kinds of things the onboard AI struggles to “see.” Until it understands these objects the way humans do, autonomous driving remains in the learning stage.

Key Challenges

The evolution toward more complete world models represents more than just technical progress; it's a fundamental shift in how AI systems understand and interact with reality. While current systems demonstrate impressive capabilities in specific domains, several key challenges remain:

1. Developing Consistent Physical Understanding:

Moving beyond pattern matching to true physical comprehension

Maintaining consistent physics across different scenarios

Handling novel situations not seen in training data

2. Bridging Simulation and Reality:

Transferring learning from simulated to real environments

Handling the complexity of real-world interactions

Developing robust predictive capabilities

The Road Ahead

The quest to create true world models is more than a technical challenge – it's about bridging the gap between AI's pattern recognition and human-like understanding of the physical world. Imagine AI systems that don't just process data but truly grasp the nature of reality: robots that intuitively understand how to handle delicate objects, autonomous vehicles that anticipate physical hazards as naturally as human drivers, and manufacturing systems that adapt to novel situations with human-like flexibility.

We stand at an interesting intersection. On one side, we have academic visions of AI systems that truly understand and interact with their environment. On the other, we have commercial implementations making remarkable yet limited progress. The tension between these two perspectives isn't holding us back – it's driving us forward, pushing the boundaries of what's possible.

Final Thoughts

The development of world models may prove to be one of the most profound advances in artificial intelligence. It's not just about making AI systems more capable; it's about creating AI that understands the world as we do. While today's systems may struggle with tasks that humans find intuitive, each limitation we discover points the way toward more sophisticated understanding.

As we've seen through our testing and analysis, we're still in the early chapters of this story. But the direction is clear: the future belongs to AI systems that don't just observe the world but truly understand it. The journey from pattern matching to genuine world understanding may be long, but it's a journey that could fundamentally transform our relationship with artificial intelligence.

Key Terms in World Model Discussion

Essential Vocabulary

World Model

Academic Definition: A system that builds and maintains an internal representation of how the physical world works and uses this understanding to predict and interact with its environment

Commercial Usage: AI systems that can generate or understand content while maintaining some level of physical consistency

Foundation Model

A large AI model trained on broad datasets that can be adapted for various specific tasks

Serves as a base for building more specialized applications

Pattern Matching

The ability to recognize and replicate patterns from training data

Differs from true understanding in that it may not capture underlying principles

Physical Understanding

The ability to grasp and apply basic physics principles

Includes concepts like gravity, object permanence, and cause-effect relationships

Object Permanence

Understanding that objects continue to exist even when they can't be directly observed

Critical for real-world interaction and planning

Digital Twin

A virtual representation of a physical system

Used for testing and training AI systems in simulated environments

Edge Case

Unusual or rare situations that test the limits of an AI system's capabilities

Often reveals limitations in the system's understanding

Frequently Asked Questions

What's the difference between a language model and a world model? While language models primarily process and generate text based on patterns in language, world models aim to understand and predict physical reality. A language model might be able to describe how a ball bounces, but a world model should understand the physics that make it bounce.

Are current AI systems true world models? Current systems, including those marketed as world models, are better understood as sophisticated pattern matching systems that can maintain some physical consistency. They don't yet achieve the full vision of world models as conceived in academic research.

Why is it hard to create true world models? Creating true world models requires not just recognizing patterns but understanding fundamental principles of physics, causality, and object interaction. This understanding needs to be consistent across all situations, not just those seen in training data.

How are world models different from digital twins? Digital twins are virtual representations of specific physical systems, while world models aim to understand physical principles that apply universally. A digital twin might simulate a specific factory, while a world model should understand how factories work in general.

What role do world models play in robotics? World models are crucial for robotics as they help robots understand and interact with their environment. Better world models could lead to robots that can handle novel situations and adapt to changing conditions more naturally.

How can we test if something is a true world model? Testing involves challenging the system with novel situations that require physical understanding rather than pattern matching. Key tests include:

Physical law consistency

Object permanence

Causal understanding

Adaptation to novel situations

Handling of edge cases
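As one illustration of the object-permanence test, the sketch below checks a single object's track through a video. If the object disappears behind something, it should reappear within a reasonable number of frames, roughly where its earlier motion would carry it. The sketch assumes per-frame horizontal positions, normalized to 0..1 with `None` when the object is hidden, supplied by a human annotator or a hypothetical object detector. `permanence_ok`, the constant-velocity assumption, and the `max_gap` and `tolerance` values are illustrative choices, not an established benchmark.

```python
from typing import List, Optional


def permanence_ok(track: List[Optional[float]], max_gap: int = 30,
                  tolerance: float = 0.2) -> bool:
    """Return True if every time the object disappears, it plausibly reappears.

    track: per-frame horizontal position of one object (normalized 0..1),
           or None for frames where the object is hidden.
    """
    i = 0
    while i < len(track):
        if track[i] is not None:
            i += 1
            continue
        start = i  # first hidden frame of this occlusion
        while i < len(track) and track[i] is None:
            i += 1
        gap = i - start  # number of hidden frames
        if start < 2 or track[start - 2] is None:
            continue  # not enough visible history before the gap to predict motion
        if i == len(track) or gap > max_gap:
            return False  # the object vanished and did not come back in time
        # Constant-velocity guess: where should it be after gap + 1 frames?
        velocity = track[start - 1] - track[start - 2]
        expected = track[start - 1] + velocity * (gap + 1)
        if abs(track[i] - expected) > tolerance:
            return False  # reappeared somewhere its motion would not carry it
    return True


walks_behind = [0.1, 0.2, 0.3, None, None, None, 0.7]  # reappears on the far side
vanishes = [0.1, 0.2, 0.3, None, None]                  # never comes back
teleports = [0.1, 0.2, 0.3, None, None, None, 0.1]      # pops out near where it started
print(permanence_ok(walks_behind), permanence_ok(vanishes), permanence_ok(teleports))
```

A cat that walks behind a couch and reappears on the far side passes. One that vanishes for good, or reappears far from where its motion predicts, fails. Treating a video that ends mid-occlusion as a failure is a deliberately strict choice.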

What's the relationship between world models and artificial general intelligence (AGI)? While world models are not sufficient for AGI on their own, many researchers consider them a necessary component. True understanding of the physical world is likely essential for any system approaching human-like intelligence.

Terms Often Confused

World Model vs. Language Model

World Models: Focus on understanding physical reality

Language Models: Focus on processing and generating language

World Model vs. Digital Twin

World Models: General understanding of physical principles

Digital Twins: Specific simulation of particular systems

Pattern Matching vs. Understanding

Pattern Matching: Replicating seen behaviors

Understanding: Grasping underlying principles and applying them to new situations
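Here is a deliberately simple contrast between the two, assuming a made-up task: predicting how long a dropped ball takes to hit the ground. The hypothetical `pattern_match` function memorizes fall times for the heights it saw in "training" and answers with the nearest example. By contrast, `fall_time` applies the physical law t = sqrt(2h / g). This caricatures pattern matching, since real models interpolate far more cleverly than a lookup table, but it shows why the distinction matters for novel situations.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def fall_time(height: float) -> float:
    """Understanding: apply the physical law t = sqrt(2h / g)."""
    return math.sqrt(2 * height / G)


# Hypothetical "training data": drop heights (m) mapped to fall times (s).
training = {h: fall_time(h) for h in (1.0, 2.0, 3.0, 4.0, 5.0)}


def pattern_match(height: float) -> float:
    """Pattern matching: reuse the fall time of the most similar height seen in training."""
    nearest = min(training, key=lambda h: abs(h - height))
    return training[nearest]


for h in (3.0, 50.0):  # one familiar height, one far outside the training range
    print(f"h={h:>5} m  pattern={pattern_match(h):.2f} s  law={fall_time(h):.2f} s")
```

For a familiar 3 m drop, both agree. For a 50 m drop, the pattern matcher still answers about 1.01 s (its 5 m example), while the law gives about 3.19 s.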

What the WolfPack Is Watching:

NVIDIA CEO Jensen Huang’s full CES 2025 keynote.

Memorable line from Jensen Huang: “This is a very kinetic keynote.”

A Cliff’s Notes version of the keynote.

What the WolfPack Is Reading:

NVIDIA. “NVIDIA Makes Cosmos World Foundation Models Openly Available to Physical AI Developer Community.” State-of-the-art models trained on millions of hours of driving and robotics videos to democratize physical AI development, available under open model license. January 6, 2025.

Guan, Yanchen, et al. “World Models for Autonomous Driving: An Initial Survey.”

Hopkins, B. “LLMs, Make Room For World Models.” Forrester Blog.

The Robot Report. “NVIDIA unveils Omniverse upgrades, Cosmos foundation model, and more at CES.” January 7, 2025.

Wiggers, Kyle. “What are AI ‘world models,’ and why do they matter?” TechCrunch, December 14, 2024.

#worldmodels, #artificialintelligence, #computerscience, #nvidia, #sora, #openai, #airesearch, #machinelearning, #robotics, #autonomousdriving, #futureofai, #physicsai, #edgecases, #aicapabilities, #cosmos, #selfdrivingcars, #aiinnovation, #deeplearning