Paper: Neural Turing Machines

Authors: Alex Graves, Greg Wayne, Ivo Danihelka

Date: 2014

Read the Original Paper: Neural Turing Machines (2014)

What This Paper is About

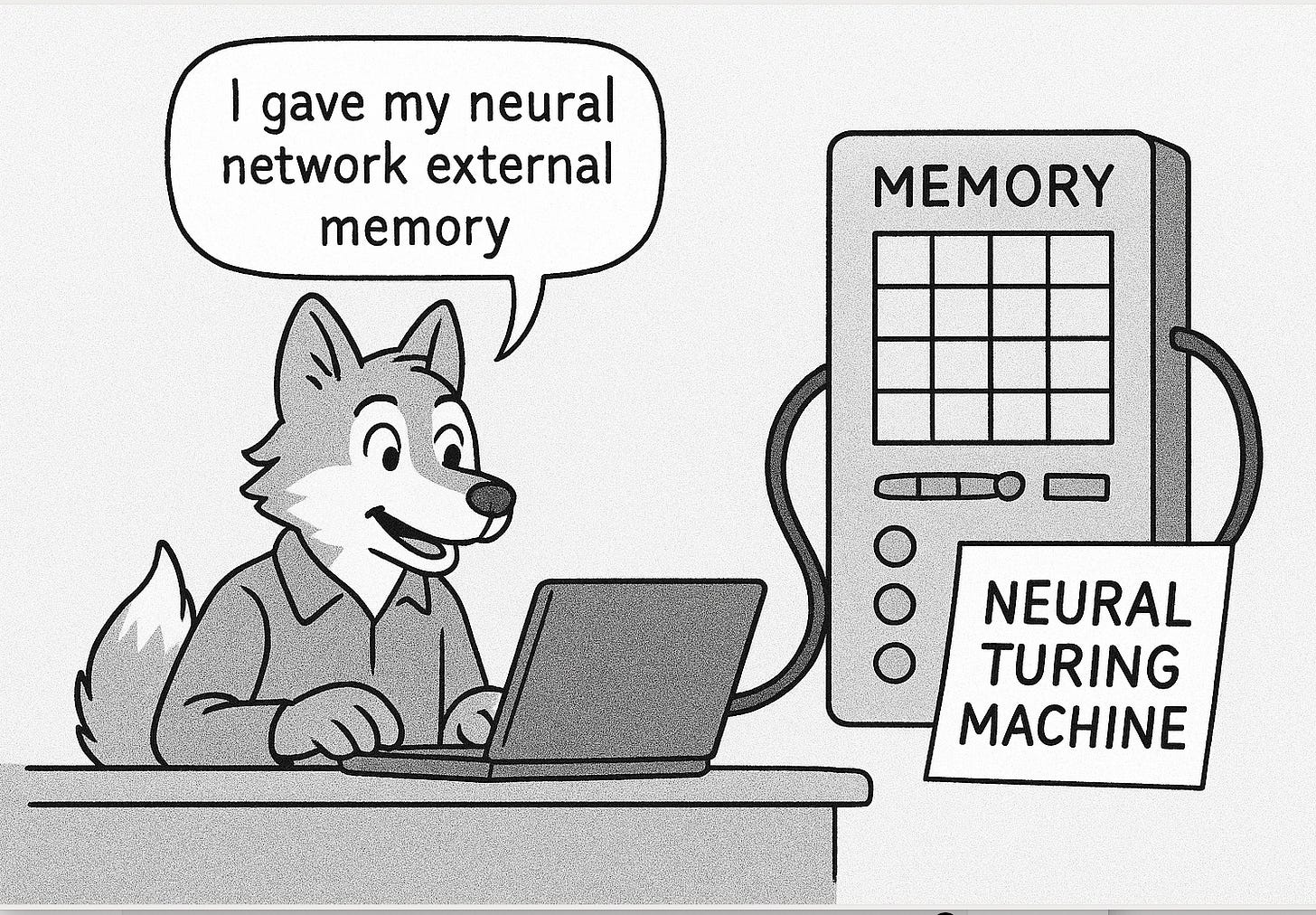

Before this paper, neural networks were like brilliant students with short-term memory loss—great at pattern recognition, terrible at recall. Neural Turing Machines (NTMs) proposed a hybrid system: a neural net controller connected to an external memory matrix, trained end-to-end using backpropagation.

The result? A model that could learn simple algorithms such as copying, sorting, and associative recall. In the paper's comparisons, an LSTM baseline learned these tasks more slowly and generalized much worse to sequences longer than those it saw in training.

Why It Still Matters

NTMs introduced the concept of differentiable memory: the ability to learn how to store and retrieve information in an external memory bank. Though they were tested only on synthetic tasks (like copying random binary vectors), their foundational ideas influenced, or developed alongside, later memory-augmented architectures such as:

Differentiable Neural Computers (DNCs)

Memory Networks

Retrieval-Augmented Transformers

Modern LLMs like GPT-4 don’t use NTMs directly, but the underlying idea of trainable memory systems echoes in everything from in-context learning to RAG pipelines.

How It Works

An NTM has two core parts:

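A controller, a neural network (the paper tries both LSTM and feedforward controllers) that decides at each step what to read and what to write

A memory matrix, a grid of N locations, each holding an M-dimensional vector, that the controller reads from and writes to through its read and write heads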
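The read and write operations on the memory matrix fit in a few lines of Python. This is a minimal sketch, assuming the controller has already produced a weighting `w` over the N locations, plus an erase vector and an add vector; the erase-then-add write follows the scheme the paper describes, while the function names `read` and `write` are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # N rows (memory locations), each an M-dimensional vector


def read(memory: Matrix, w: Vector) -> Vector:
    """Blurry read: r = sum_i w[i] * memory[i], a weighted blend of all rows."""
    m = len(memory[0])
    return [sum(w[i] * memory[i][j] for i in range(len(memory))) for j in range(m)]


def write(memory: Matrix, w: Vector, erase: Vector, add: Vector) -> Matrix:
    """Blurry write: erase then add, each scaled by that location's weight.

    erased[i][j] = memory[i][j] * (1 - w[i] * erase[j])
    new[i][j]    = erased[i][j] + w[i] * add[j]
    Returns a new matrix; the input is not modified.
    """
    return [
        [row[j] * (1.0 - w[i] * erase[j]) + w[i] * add[j] for j in range(len(row))]
        for i, row in enumerate(memory)
    ]


if __name__ == "__main__":
    mem = [[0.0, 0.0], [1.0, 1.0], [0.5, 0.5]]  # N = 3 locations, M = 2
    w = [0.0, 1.0, 0.0]  # a sharp weighting focused entirely on location 1
    mem = write(mem, w, erase=[1.0, 1.0], add=[0.25, 0.75])
    print(mem)  # → [[0.0, 0.0], [0.25, 0.75], [0.5, 0.5]]
    print(read(mem, w))  # → [0.25, 0.75]
```

With a one-hot weighting this behaves like an ordinary indexed read and write; with a spread-out weighting every location is touched a little, which is what lets gradients reach all of memory.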

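Instead of accessing one fixed location at a time, each head produces a weighting over all memory locations and reads or writes a blend of them in proportion to those weights. Heads find where to focus by combining content-based addressing (matching a key against the stored vectors) with location-based addressing (shifting focus relative to the previous position). Because this soft, attention-like access is differentiable, the entire system can be trained with gradient descent.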
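The soft addressing can be sketched as a four-stage pipeline: a content weighting (cosine similarity scaled by a key strength `beta`, normalized with a softmax), interpolation with the previous weighting through a gate `g`, a circular shift, and a sharpening step with exponent `gamma`. This is a minimal pure-Python illustration of that scheme, not the authors' code; restricting shifts to -1, 0 and +1 and all function names are our assumptions.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector, eps: float = 1e-8) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + eps)  # eps avoids division by zero for blank rows


def content_weights(memory: List[Vector], key: Vector, beta: float) -> Vector:
    """Softmax over beta-scaled cosine similarity between the key and each row."""
    scores = [beta * cosine(key, row) for row in memory]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def interpolate(w_content: Vector, w_prev: Vector, g: float) -> Vector:
    """Gate g in [0, 1]: 1 keeps the content weighting, 0 keeps the previous one."""
    return [g * c + (1.0 - g) * p for c, p in zip(w_content, w_prev)]


def shift(w: Vector, s: Vector) -> Vector:
    """Circular convolution with a shift distribution s over offsets -1, 0, +1."""
    n = len(w)
    offsets = [-1, 0, 1]  # assumption: focus can move at most one step per time step
    out = [0.0] * n
    for i in range(n):
        for k, p in zip(offsets, s):
            out[i] += w[(i - k) % n] * p
    return out


def sharpen(w: Vector, gamma: float) -> Vector:
    """Raise each weight to gamma >= 1 and renormalize to undo shift blurring."""
    powered = [x ** gamma for x in w]
    total = sum(powered)
    return [x / total for x in powered]


def address(memory: List[Vector], key: Vector, beta: float, g: float,
            s: Vector, gamma: float, w_prev: Vector) -> Vector:
    w = content_weights(memory, key, beta)
    w = interpolate(w, w_prev, g)
    w = shift(w, s)
    return sharpen(w, gamma)


if __name__ == "__main__":
    mem = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
    w = address(mem, key=[0.0, 1.0, 0.0, 0.0], beta=10.0, g=1.0,
                s=[0.0, 0.0, 1.0], gamma=2.0, w_prev=[0.25] * 4)
    print(max(range(len(w)), key=w.__getitem__))  # → 2 (content match at 1, shifted +1)
```

Every stage is a smooth function of the controller's outputs (`key`, `beta`, `g`, `s`, `gamma`), which is why the whole lookup can be trained by backpropagation.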

But it wasn’t all smooth sailing:

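Generalization had limits. On the copy task, an NTM trained on sequences of up to 20 vectors copied longer sequences well, but it started making errors by length 120.

Training was also fragile. The original code was not released, and reimplementations reported instability such as exploding gradients and NaN losses, which slowed real-world adoption.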

Still, the promise was clear: you could teach a neural net to learn an algorithm rather than program it directly.

Why It Blew People’s Minds

NTMs could learn algorithms. In the paper, they learned tasks like:

Copying sequences

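Priority sorting (reordering input vectors according to a priority score attached to each one)

Associative recall (given a list of items and then one item from it, returning the item that came next)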

These are simple tasks for humans or traditional code—but astonishing for a neural net to learn purely from data, without being programmed.
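To make the copy task concrete, here is a hypothetical data generator in the spirit of the paper's setup (random 8-bit vectors, sequence lengths between 1 and 20, followed by a delimiter). The exact layout, with an extra delimiter channel and blank input steps while the model answers, is our assumption rather than the paper's code.

```python
import random
from typing import List, Optional, Tuple

Seq = List[List[int]]


def make_copy_example(width: int = 8, min_len: int = 1, max_len: int = 20,
                      rng: Optional[random.Random] = None) -> Tuple[Seq, Seq]:
    """Build one (inputs, targets) pair for a copy task.

    Inputs: `length` random binary vectors, one delimiter step, then `length`
    blank steps during which the model must reproduce the sequence. Each input
    step has width + 1 channels; the extra last channel flags the delimiter.
    Targets: zeros up to and including the delimiter, then the original vectors.
    """
    rng = rng or random.Random()
    length = rng.randint(min_len, max_len)  # inclusive on both ends
    seq = [[rng.randint(0, 1) for _ in range(width)] for _ in range(length)]

    inputs = ([v + [0] for v in seq]                        # data, delimiter channel off
              + [[0] * width + [1]]                         # delimiter step
              + [[0] * (width + 1) for _ in range(length)])  # blank answer phase
    targets = [[0] * width for _ in range(length + 1)] + [v[:] for v in seq]
    return inputs, targets


if __name__ == "__main__":
    x, y = make_copy_example(rng=random.Random(0))
    n = (len(x) - 1) // 2  # recover the sequence length
    print(len(x) == len(y))  # → True
    print(y[n + 1:] == [row[:-1] for row in x[:n]])  # → True
```

A model only "learns the algorithm" if it keeps copying correctly when `max_len` is raised beyond the lengths it was trained on, which is exactly the generalization test the paper uses.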

Memorable Quote from the Paper

“Our intention is to blend the fuzzy pattern recognition capabilities of neural networks with the algorithmic power of programmable computers.”

Podcast Note

Today’s episode was created with the help of Google NotebookLM and features two very synthetic voices trying to explain how memory-augmented networks changed the AI game. Enjoy the banter—no RAM required.

Editor’s Note

While NTMs themselves were more proof-of-concept than production-ready, their conceptual legacy is massive. They reintroduced the idea that deep learning doesn’t just need more neurons—it needs tools, like memory, planning, and structure.

Coming Tomorrow

📦 Meta-learning with MAML — the paper that taught models how to learn faster.

#NeuralTuringMachine #MetaLearning #DifferentiableMemory #AIHistory #WolfReadsAI #DeepMind #MemoryAugmentedNetworks #AlgorithmLearning
