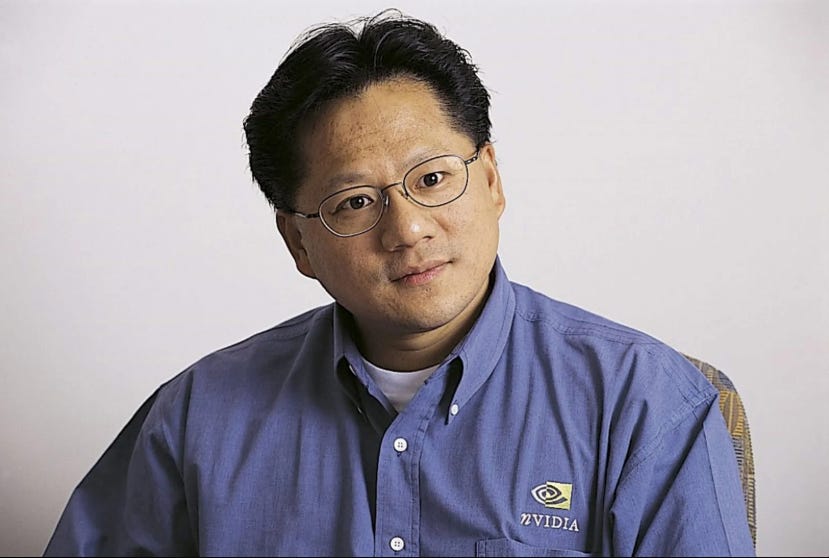

As the lights dimmed in the Dôme de Paris on June 11, 2025, Jensen Huang appeared under a cascade of laser beams and floating GPU icons. “This is the era of AI factories,” he declared, unveiling banks of Grace Blackwell NVL72 systems humming like futuristic beehives. In that moment, the once-modest gaming-chip architect became the conductor of a global intelligence infrastructure, a fitting culmination to a journey that began with toilet brushes and coffee cups.

From Reform School Janitor to Systems Thinker

At age nine, a family mix-up sent Huang not to the elite boarding school they’d intended, but to Oneida Baptist Institute in rural Kentucky. There, every morning began with the same ritual: scrubbing toilets until they gleamed. Rather than grumble, Huang treated the chore as an experiment. He mapped cleaning routes, timed each swipe, and set self-imposed quality targets. “I was probably the best toilet cleaner they ever had,” he later joked. That early taste of process optimization would echo through every boardroom he led.

Denny’s Dishwasher to Data-Center Dynamo

By fifteen, Huang balanced high school classes with a night shift at Denny’s. As dishwasher, busboy, and waiter, he approached each task like an engineer optimizing a pipeline: How many coffee cups could he carry? What was the shortest walking path between tables? He sketched floor plans in pencil, logged service times, and shaved seconds off every delivery. Those experiments were more than diner anecdotes: in hindsight, they read like an early rehearsal for the throughput thinking behind GPU load balancing over NVLink.

The Scholar’s Ledger: Frugality and Credentials

When tuition bills loomed, Huang chose Oregon State University to keep costs low and earned his BS in electrical engineering without breaking the bank. Later, he enrolled part-time at Stanford, juggling courses with industry R&D. Over seven years, he added a master’s degree without pausing his career—an exercise in resource arbitrage that presaged NVIDIA’s lean approach to capital allocation.

Planting the Platform Seed: Founding NVIDIA

Huang co-founded NVIDIA in 1993 with Chris Malachowsky and Curtis Priem after the three met at an East San Jose Denny’s. If you’re in the mood for both GPU history and a stack of pancakes, this Denny’s is still there, at 2484 Berryessa Road.

The “Accident” That Redefined Computing

In 2006, NVIDIA introduced CUDA, a parallel-computing platform that let scientists harness thousands of GPU cores. Between then and 2012, research labs discovered that NVIDIA GPUs, designed for games, excelled at neural-network training. Huang recognized the pattern, and what began as a serendipitous side benefit became a deliberate AI-platform strategy:

Market Expansion: Gaming → High-Performance Computing → AI/ML

Product Evolution: Graphics chips → Compute accelerators → End-to-end AI platforms

Value Leap: 10× addressable market growth without rewriting core IP

2025 in Paris: AI Factories & Continental Sovereignty

At VivaTech 2025, Huang unveiled NVIDIA’s blueprint for “AI factories”: modular clusters of Grace Blackwell NVL72 racks, each housing 72 Blackwell GPUs linked via NVLink for over 100 TB/s of aggregate GPU-to-GPU bandwidth. These factories are designed not just for scale, but for efficiency: every watt, every millimeter of rack space, tuned for maximum throughput.
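The “over 100 TB/s” figure can be sanity-checked with simple arithmetic. The sketch below assumes a per-GPU NVLink bandwidth of 1.8 TB/s, the number NVIDIA publishes for fifth-generation NVLink; that value is not stated in this post, and the function and constant names are purely illustrative.

```python
# Back-of-the-envelope check of the NVL72 bandwidth figure.
GPUS_PER_RACK = 72  # stated in the text above

# Assumption (not from this post): 1.8 TB/s per GPU, NVIDIA's published
# figure for fifth-generation NVLink.
NVLINK_TB_PER_S_PER_GPU = 1.8


def aggregate_nvlink_bandwidth(gpus: int, per_gpu_tb_s: float) -> float:
    """Sum the per-GPU NVLink bandwidth across one rack, in TB/s."""
    return gpus * per_gpu_tb_s


rack_bandwidth = aggregate_nvlink_bandwidth(GPUS_PER_RACK, NVLINK_TB_PER_S_PER_GPU)
print(f"{rack_bandwidth:.1f} TB/s")  # prints 129.6 TB/s
```

Under that assumption the rack lands at roughly 130 TB/s, which is consistent with the “over 100 TB/s” claim.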

He also urged Europe to take control of its compute destiny. Within days, the European Commission’s InvestAI initiative, which includes a €20 billion fund for AI gigafactories, was positioned to finance four of them across the continent. The U.K. government reaffirmed a £2 billion AI investment package (including £1 billion for national compute infrastructure) in its Spring AI Review. And although Macron and Scholz did not speak directly at VivaTech, their joint statements in the conference’s aftermath underscored the geopolitical stakes of digital autonomy in an age of shifting tech power.

Speculative Horizons: Rubin, Feynman, & Global AI Grids

Looking past Blackwell, Huang offered a glimpse of NVIDIA’s future roadmap:

Rubin (2026): A hybrid GPU+CPU on TSMC’s 3 nm node with HBM4, projected to top 50 petaflops on 4-bit AI workloads.

Feynman (2028): Named for the visionary physicist, this chip will unify next-gen HBM with ultra-dense compute—primed for edge-to-cloud deployments.

Envision AI factories powered by wind turbines in Nordic fjords, robotics labs in Germany running digital-twin simulations, and genomic-analysis clouds in Brazil—each built on the same NVIDIA foundation, yet customized to local needs.

Looking Ahead

Jensen Huang’s odyssey, from Taiwanese immigrant and reform-school janitor to the mastermind of a worldwide AI-infrastructure network, reveals the power of operational grit married to strategic foresight. He transformed the discipline of cleaning toilets and optimizing coffee service into the DNA of NVIDIA’s platform play. As AI factories come online around the globe, they carry forward his core lesson: no task is too small, no detail too trivial, when you’re engineering the future of intelligence.

Podcast Note:

This episode was produced with NotebookLM and features AI podcasters. During a transcript review, one funding figure was found to be cited in U.S. dollars instead of euros; the correct commitments are €20 billion for the InvestAI program and £1 billion for U.K. national compute (part of a broader £2 billion AI package).

Vocabulary Key

Platform Strategy: Designing a core technology so third parties can build complementary products, creating network effects.

Sovereign AI: Nationally governed AI infrastructure to protect cultural and data autonomy.

NVLink: NVIDIA’s high-speed interconnect that lets GPUs exchange data directly at terabytes per second.

Petaflop: One quadrillion (10^15) floating-point operations per second, a common unit for quoting AI compute throughput.

Token: A discrete unit of text or data processed by modern language models.
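To make the petaflop definition concrete, here is a small conversion sketch. It applies the 50-petaflop figure quoted for Rubin to a hypothetical workload of 10^21 operations; the workload size and helper names are invented for illustration, and the result is a lower bound that assumes peak throughput is sustained, which real workloads rarely manage.

```python
PETA = 10**15  # one petaflop = 10^15 floating-point operations per second


def petaflops_to_flops(petaflops: float) -> float:
    """Convert a throughput in petaflops to operations per second."""
    return petaflops * PETA


def seconds_for_workload(total_ops: float, petaflops: float) -> float:
    """Idealized run time: total operations divided by peak throughput."""
    return total_ops / petaflops_to_flops(petaflops)


# Hypothetical workload of 1e21 operations at the quoted 50 petaflops.
runtime_s = seconds_for_workload(1e21, 50)
print(runtime_s)         # prints 20000.0
print(runtime_s / 3600)  # the same run time expressed in hours
```

At peak, that hypothetical job would take 20,000 seconds, or a little over five and a half hours.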

FAQs

Why did NVIDIA GPUs outperform CPUs for AI? Their thousands of parallel cores and high memory bandwidth match neural-network workloads more closely than CPUs’ sequential architectures.

What is an “AI factory”? A purpose-built compute cluster optimized end-to-end for training and serving large-scale AI models.

How does sovereign AI differ from cloud AI? Sovereign AI emphasizes national ownership and governance of data and compute, rather than reliance on global hyperscalers.

What role does CUDA play? CUDA is NVIDIA’s parallel-programming platform, enabling developers to accelerate diverse applications on GPUs.
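The parallelism mentioned in the first FAQ can be illustrated without a GPU. In a dense neural-network layer, each output value depends only on one row of weights and the shared input, so every row can be computed independently. The sketch below uses Python’s standard thread pool as a stand-in for GPU cores; it demonstrates the independence of the work, not a speedup, and it is not CUDA code. All names and values are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vec):
    """Dot product of one weight row with the input vector."""
    return sum(w * x for w, x in zip(row, vec))


def layer_sequential(weights, inputs):
    """CPU-style loop: compute one output row after another."""
    return [dot(row, inputs) for row in weights]


def layer_parallel(weights, inputs, workers=4):
    """Each row is independent, so rows can be handed to separate workers.

    pool.map returns results in the same order as the input rows.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))


weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [0, 1, 0]]
inputs = [1, 0, -1]

sequential = layer_sequential(weights, inputs)
parallel = layer_parallel(weights, inputs)
print(parallel)  # prints [-2, -2, -2, 0]
```

Both versions return the same result because no row needs another row’s output. A GPU exploits the same property across thousands of cores at once, and CUDA is the programming layer that exposes it.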

#aiinfrastructure #nvidia #gtcparis #deeptech #platformstrategy #vivatech #sovereignai #nvlink #gpu #mlops #futureofwork #jensenhuang #deeplearning #deeplearningwiththewolf
