The Pentagon's Use of AI: From World War II to Modern Defense

Yesterday morning, I made a mountain of sweet, chewy, purple mochi pancakes for Father's Day. They're fun to make and even more fun to eat, since you can pull the finished pancakes apart with your hands in true mochi style. As delightful as the whole process is, making a stack of them takes a long time, so I used the cooking time to catch up on my AI podcasts. One of them mentioned, in passing, that OpenAI and the U.S. military were working together.

This piqued my interest, so I did some research to verify what I had heard. Sure enough, in January 2024, OpenAI changed its usage policies to permit work with the U.S. military. Previously, those policies explicitly banned the use of its technologies, including ChatGPT, for military and warfare purposes. By removing that prohibition, OpenAI opened the door to collaborations with the Department of Defense (DoD).

Despite this shift, OpenAI remains cautious about deploying ChatGPT for direct military applications. Its policies still state that its tools may not be used to “harm people, develop weapons, for communications surveillance, or to injure others or destroy property.” The company emphasizes using AI responsibly and addressing potential risks such as disinformation and malicious use. (OpenAI/Safety)

“AI systems are becoming a part of everyday life. The key is to ensure that these machines are aligned with human intentions and values.” - Mira Murati, Chief Technology Officer at OpenAI

In May 2024, OpenAI published a blog post titled "Reimagining secure infrastructure for advanced AI," calling for an evolution in infrastructure security to protect advanced AI systems from sophisticated cyber threats. As AI becomes more strategically important and sought after, the fact that model weights must be available online makes them a target for hackers. OpenAI argues that conventional security controls can provide robust defenses, but that new approaches are needed to maximize protection while keeping these systems available.

OpenAI proposes six security measures to complement existing cybersecurity best practices and protect advanced AI: trusted computing for AI accelerators; network and tenant isolation guarantees; innovation in operational and physical security for datacenters; AI-specific audit and compliance programs; AI for cyber defense; and resilience, redundancy, and research. OpenAI notes that these measures will require investment, collaboration, and commitment from the AI and security communities to protect advanced AI systems against ever-increasing threats.

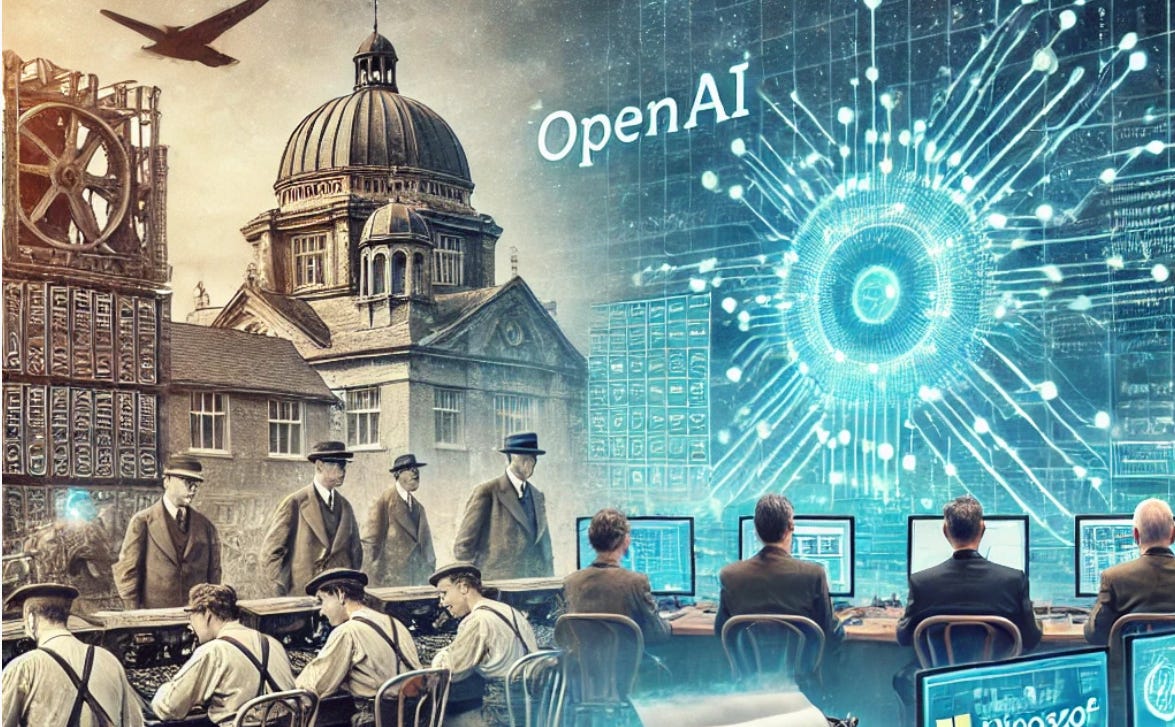

As I flipped and stirred, with the smell of sweet ube filling the kitchen, I found myself thinking about the Enigma machine. The parallels between the wartime efforts at Bletchley Park and today's AI advancements are fascinating. Just as the Allies used early codebreaking machines to break Enigma and gain a strategic advantage, modern AI technologies are poised to transform defense strategies, a reminder of how each new generation of technology reshapes national security.

The First AI Safety Summit at Bletchley Park

Interestingly, Bletchley Park continues to be a significant site for technological advancements and discussions. In November 2023, it hosted the world’s first AI Safety Summit. This historic event brought together leading AI nations, technology companies, researchers, and civil society groups to discuss the safe and responsible development of AI. The summit aimed to address the ethical and safety challenges posed by AI technologies and foster international collaboration to ensure these technologies are used for the greater good.

Comparing Historical and Modern Technologies

The codebreaking machines of World War II, such as the electromechanical Bombe (used against Enigma) and the electronic Colossus (used against the German Lorenz cipher), were built for one job: decoding enemy communications. Modern AI technologies offer a far broader range of capabilities. Today's AI can analyze real-time data, predict threats, and automate complex tasks, providing a strategic edge in both offensive and defensive military operations.

Ethical Concerns Then and Now

The codebreakers at Bletchley Park were acutely aware of the ethical implications of their work. They operated under strict secrecy, understanding that their breakthroughs could significantly affect the course of the war. Their overriding concern was a moral obligation to use their skills to save lives and end the conflict as swiftly as possible.

Similarly, today's AI developers are grappling with ethical dilemmas. The potential for AI misuse, particularly in generating disinformation or powering autonomous weapons, is a significant concern. OpenAI and other tech companies are adopting usage policies and ethical frameworks to mitigate these risks, but the rapid pace of AI advancement presents ongoing challenges.

Balancing Innovation and Responsibility

Both historical and modern technological advancements share a common theme: the need to balance innovation with ethical responsibility. The Enigma codebreakers focused on ensuring their work contributed to a just cause, while modern AI developers strive to ensure their technologies are used responsibly and ethically.

Final Thoughts

The Pentagon's integration of AI technologies from OpenAI and Microsoft, including Microsoft's deployment of GPT-4 in a top-secret cloud for Pentagon use, marks a significant evolution in defense capabilities. Just as early computing technology helped win World War II, modern AI has the potential to transform national security, offering greater efficiency, strategic insight, and stronger defenses. As AI continues to advance, it will be critical to balance innovation with ethical considerations so that these powerful tools are used responsibly.

Presented by Diana Wolf Torres, a freelance writer, navigating the frontier of human-AI collaboration.

Stay Curious. Stay Informed. #DeepLearningDaily

Additional Resources for Inquisitive Minds

Microsoft deploys GPT-4 large language model for Pentagon use in top secret cloud. John Harper. DefenseScoop. (May 7, 2024.)

Developing beneficial AGI safely and responsibly. OpenAI.

OpenAI Lifts Military Ban, Opens Doors to DOD for Cybersecurity Collab. Charles Lyons-Burt. GovCon Wire. (January 22, 2024.)

Defense Department: Pentagon’s Top AI Official Addresses ChatGPT’s Possible Benefits, Risks. Stew Magnuson. National Defense. (March 8, 2023.)

Ethics and innovation belong hand in hand. Helen Margetts, Cosmina Dorobantu, and Josh Cowls. The Alan Turing Institute. "Looking back to the 1940s, Alan Turing himself demonstrated that technological code and a code for ethics could be developed together – in fact, he had to develop them together."

War of Secrets: Cryptology in WWII. National Museum of the United States Air Force. Cryptology is the study of secret codes. Being able to read encoded German and Japanese military and diplomatic communications was vitally important for victory in World War II, and it helped shorten the war considerably.

Listen to "Deep Learning with the Wolf" on Spotify.

Follow @DeepLearningDaily on YouTube.

#AIEthics #DeepLearning #MachineLearning #TuringInstitute #AlanTuring #OpenAI #Microsoft #ChatGPT
