Title: Relational Recurrent Neural Networks

Authors: Adam Santoro*, Ryan Faulkner*, David Raposo*, Jack Rae, Mike Chrzanowski, Théophane Weber, Daan Wierstra, Oriol Vinyals, Razvan Pascanu, Timothy Lillicrap (*equal contribution)

Institution: DeepMind, University College London

Date: 2018

Links: NeurIPS

Why this paper still howls

Classic RNNs (even beefy LSTMs) can store information for long stretches, but they’re clumsy at reasoning about relations between those stored facts. RRNs swap the usual LSTM cell for a “Relational Memory Core” (RMC), letting each memory slot attend to every other slot (Transformer-style). The result? State-of-the-art perplexity on language modelling (WikiText-103) plus strong gains on program evaluation and a Pac-Man-style RL challenge, all with fewer parameters than brute-force scaling would demand. It was early evidence that structured attention plus recurrence can beat sheer size on some reasoning tasks.

How it works

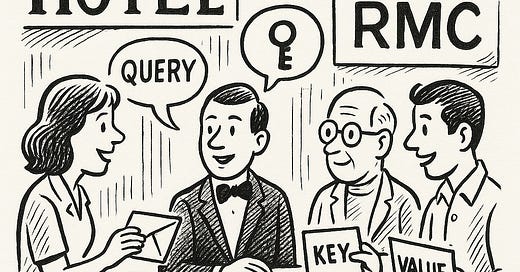

Picture your RNN’s hidden state as a hotel with many rooms (memory slots). In a vanilla RNN each guest scribbles notes but never talks to the neighbours. The RMC installs a lobby where, every timestep, guests exchange gossip via multi-head dot-product attention. Queries are projected from each slot, while keys and values are projected from the slots plus the new input, so every room hears both its neighbours and the latest news. Attention scores decide who listens to whom, and the blended “tea” is added back into each room (followed by a small row-wise MLP). A lightweight LSTM-style gating step then decides how much of it to keep, and the updated matrix marches on to the next timestep. Because slots can now compare notes explicitly, the network can reason about relationships over time rather than just memorising sequences.
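To make the lobby metaphor concrete, here is a minimal pure-Python sketch of one memory update. It assumes a single attention head, inputs already projected to the slot width, and no biases. It deliberately omits the paper’s multi-head split, row-wise MLP and layer normalisation. The gate formulas are a simplified LSTM-style assumption, not the paper’s exact equations. All names (`rmc_step`, the `params` keys, the toy sizes) are hypothetical.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rand_matrix(d, rng):
    """Hypothetical d x d weight matrix with small random entries."""
    return [[rng.gauss(0.0, 1.0 / math.sqrt(d)) for _ in range(d)] for _ in range(d)]


def rmc_step(memory, x, params):
    """One simplified relational-memory update (single head, no MLP/LayerNorm).

    memory: list of N slot vectors, each of length d; x: input vector of length d.
    """
    d = len(x)
    rows = memory + [x]  # keys/values cover the slots *and* the new input
    queries = [matvec(params["Wq"], m) for m in memory]  # queries come from slots only
    keys = [matvec(params["Wk"], r) for r in rows]
    values = [matvec(params["Wv"], r) for r in rows]

    new_memory = []
    for m, q in zip(memory, queries):
        # Scaled dot-product attention: how strongly this slot listens to each row.
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
        attended = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(d)]
        candidate = [mi + ai for mi, ai in zip(m, attended)]  # residual connection

        # Assumed LSTM-style gates, driven by the input and the previous slot.
        tanh_m = [math.tanh(v) for v in m]
        i_gate = [sigmoid(a + b) for a, b in
                  zip(matvec(params["Wi"], x), matvec(params["Ui"], tanh_m))]
        f_gate = [sigmoid(a + b) for a, b in
                  zip(matvec(params["Wf"], x), matvec(params["Uf"], tanh_m))]
        new_memory.append([ig * math.tanh(c) + fg * mi
                           for ig, fg, c, mi in zip(i_gate, f_gate, candidate, m)])
    return new_memory


# Usage: feed a short random sequence one step at a time, carrying memory forward.
rng = random.Random(0)
d, n_slots = 4, 3
params = {name: rand_matrix(d, rng) for name in ("Wq", "Wk", "Wv", "Wi", "Ui", "Wf", "Uf")}
memory = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n_slots)]
for _ in range(5):
    x = [rng.gauss(0.0, 1.0) for _ in range(d)]
    memory = rmc_step(memory, x, params)
print(len(memory), len(memory[0]))  # 3 4
```

In this sketch, queries come only from the memory slots, while keys and values also include the new input. That asymmetry lets fresh information flow into every slot without the input becoming a slot itself.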

Key takeaways for humans

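Relational bias matters. By baking “objects + their relations” into memory, RRNs solve tasks that stump plain LSTMs, such as the paper’s “Nth farthest” toy task (which vector in a sequence is the n-th farthest from a given one?).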

Attention isn’t just for Transformers. You can retrofit it inside recurrent cores to get the best of both worlds: long contexts and efficient online processing.

Sample-efficient RL gains. In partially observed games (Mini-PacMan), RRNs learned better policies faster, which could be handy for robotics or edge devices.

Blueprint for modern hybrids. Many of today’s memory-augmented agents (e.g., in embodied AI or autonomy) echo the RMC idea.

Note to readers

The accompanying 10-minute podcast is 100% AI-generated in Google NotebookLM. This free tool is extraordinarily useful for breaking down information in a variety of formats, including mind maps, FAQs, study guides, or a conversational “audio overview” (which is what I use for these daily podcasts).

#WolfReadsAI #RelationalMemory #DeepLearning #NeurIPS #RNN #AttentionMechanism #AIResearch #MachineLearning #GoogleNotebook #DeepLearningwiththeWolf #30DaysofAIPapers #AdamSantoro #RyanFaulkner #DavidRaposo #RelationalRecurrentNeuralNetworks #DeepMind #GoogleDeepMind #Google
