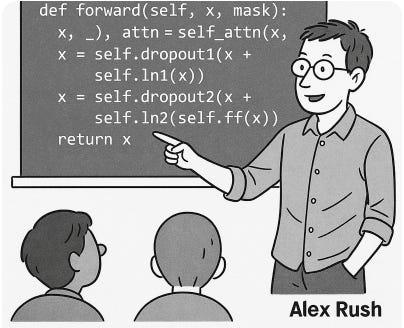

📚 Paper: The Annotated Transformer (Harvard NLP)

✍️ Author: Alexander Rush

🏛️ Institution: Harvard NLP

📆 Date: 2018

What This Paper Is About

Strictly speaking, this isn’t a “paper.” It’s a blog post—a tutorial. But don’t let that fool you. The Annotated Transformer quietly shaped the trajectory of modern AI.

After the 2017 release of “Attention Is All You Need,” a generation of readers stared at the equations and nodded solemnly. Few really understood it. Then, in 2018, Harvard NLP dropped this beautifully written, line-by-line annotated PyTorch implementation. And just like that, it clicked.

This post walked you through the Transformer model like a thoughtful TA with infinite patience. Every equation got a paragraph. Every architectural choice got a diagram. Every function had PyTorch code you could run yourself.

It was open source. It was free. It was friendly.

And it worked.
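For a taste of that equation-plus-paragraph style, here is the central formula the post unpacks: scaled dot-product attention from "Attention Is All You Need." Q, K, and V are the query, key, and value matrices, and d_k is the dimension of the key vectors.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V
```

Each row of the softmax is a set of weights over the keys, so every output row is a weighted average of the value vectors. The 1/√d_k scaling is there, per Vaswani et al., to keep large dot products from pushing the softmax into regions with very small gradients.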

Why It Still Matters

Because the Transformer became the DNA of nearly every large language model, this blog post became required reading. It demystified the machinery of modern AI for:

Engineers and researchers trying to build their own models

Students learning how attention works in practice

Tinkerers who wanted to see what the fuss was about

Entire ML bootcamps that adopted it as a de facto textbook

It’s hard to overstate how many people got their start with Transformers not by reading Vaswani et al., but by reading this post.

How It Works

The Annotated Transformer walks you through the full architecture with five superpowers:

Clear prose

Simple equations

Clean PyTorch code

Live visualizations

No assumptions about your math level

By the time you’re done, you haven’t just read about the Transformer—you’ve built one yourself.
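To make "built one yourself" a little more concrete, here is a minimal PyTorch sketch of the attention step at the heart of the model. It is written in the spirit of the post's code, not copied from it. The function name `attention_sketch` and the toy tensor sizes are illustrative assumptions, and the mask convention (entries equal to 0 are blocked) is one common choice rather than the only one.

```python
import math

import torch
import torch.nn.functional as F


def attention_sketch(query, key, value, mask=None):
    """Hypothetical helper: softmax(Q K^T / sqrt(d_k)) V.

    query: (..., n_q, d_k), key: (..., n_k, d_k), value: (..., n_k, d_v).
    mask (optional): broadcastable to (..., n_q, n_k); entries equal to 0
    mark key positions a query is not allowed to attend to.
    """
    d_k = query.size(-1)
    # Score every query against every key, scaled by sqrt(d_k)
    scores = torch.matmul(query, key.transpose(-2, -1)) / math.sqrt(d_k)
    if mask is not None:
        # A large negative score becomes (numerically) zero weight after softmax
        scores = scores.masked_fill(mask == 0, -1e9)
    # Each row of weights is a probability distribution over the keys
    weights = F.softmax(scores, dim=-1)
    # Output rows are weighted averages of the value vectors
    return torch.matmul(weights, value), weights


# Usage: a batch of 2 sequences, 5 tokens each, 8-dimensional vectors
torch.manual_seed(0)
x = torch.randn(2, 5, 8)
# Causal mask: token i may attend only to tokens 0..i
causal_mask = torch.tril(torch.ones(5, 5))
out, attn = attention_sketch(x, x, x, mask=causal_mask)
print(out.shape)  # torch.Size([2, 5, 8])
print(attn[0, 0])  # all weight on the first token
```

The lower-triangular mask is what lets a decoder train on whole sequences at once without peeking at future tokens: the first token can only attend to itself, so row 0 of `attn` puts all of its weight there.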

It wasn’t flashy. It wasn’t monetized. But it was one of the best educational resources ever written about modern deep learning.

Read the original blog post on the Harvard NLP website.

Podcast Note

🎙️ Today’s podcast was generated by AI using Google NotebookLM.

Memorable Quote

“In this post I present an ‘annotated’ version of the Transformer model from the paper ‘Attention is All You Need.’ I have tried to make it as clear and friendly as possible.”

Mission accomplished, Alex.

Editor’s Note

This was the first post that made me feel like I could build a Transformer. Not just understand one, but actually code one, line by line. In a sea of “too hard, too mathy” papers, this was the lifeboat. And we’re still floating on it.

Additional Resources:

Read more from Alexander Rush, Associate Professor at Cornell: https://rush-nlp.com/

Coming Tomorrow

🧠 The First Law of Complexodynamics — A philosophical banger about complexity, order, and the entropy of intelligence. This one’s got ideas. Big ones.

#Transformers #AttentionIsAllYouNeed #PyTorch #HarvardNLP #AnnotatedTransformer #WolfReadsAI #DeepLearning #AIEducation #AlexRush
