The Castle, The Hive, and The Algorithm: A Personal Lesson in Ensemble Methods

How a Childhood Mistake Explained Modern AI Architecture

When I was very young, we lived in a giant stone estate that I called “the castle.” No one else called it that, but with gargoyles over the front door, my young mind was convinced.

Situated just outside Sleepy Hollow, the place had a proper name like an English estate. We didn’t own it, of course; my parents were the caretakers. We did, however, live next door to a Rockefeller. Granted, it was likely a lesser Rockefeller, but a Rockefeller all the same.

The thing about estate life is the isolation. We had exactly one set of neighbors on the property, living in a cottage that was likely the old servants’ quarters. Kids don’t analyze social hierarchies; I just knew their house was cozy compared to the castle. Since there weren’t many choices for playmates, these kids were basically it. I didn’t realize until it was too late that those kids were pure trouble.

Release the Swarm! The youngest kid was my age, and one day we had a stroke of genius: let’s throw glass bottles. The estate was old and full of junk, so finding ammunition was easy. The plan was simple. Throw bottle. Watch it shatter. Kid logic. Then we hit the hive. It did not end well.

That was my introduction to swarm intelligence—and my introduction to the emergency room. But I survived.

And years later, looking at machine learning models, I remembered that afternoon. I learned that a single bee is just a bug. But a swarm with a shared target? That is a force of nature. (I was fast, but some of those bees were faster.) Which brings us perfectly to the topic of the day.

What Is Swarm Intelligence?

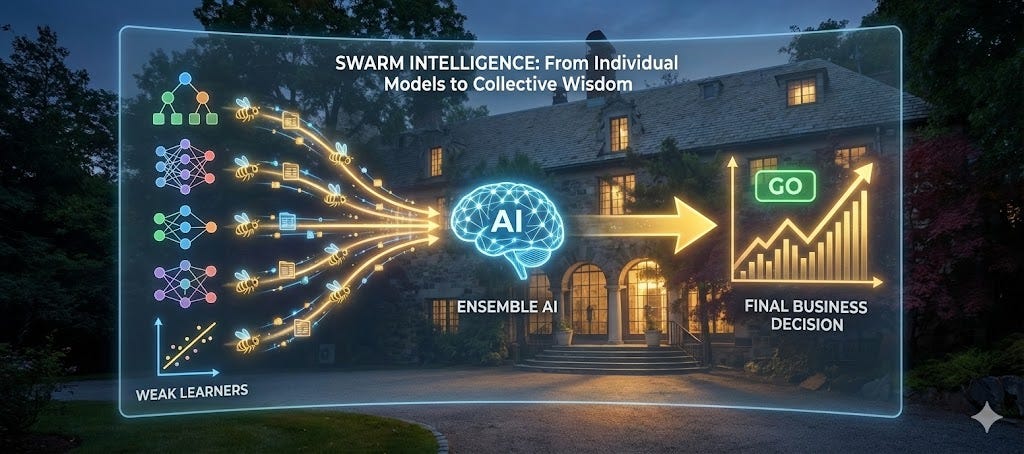

Swarm intelligence is the emergence of complex behavior from simple rules followed by many individuals. A single bee can’t do much. But thousands of bees? Together they solve genuinely hard logistical problems, like choosing a new home, with no one in charge. Crucially, the hive has no “Bee CEO.” There is no top-down management. It is purely decentralized decision-making. Thousands of imperfect guesses combine to form one remarkably reliable decision.
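Here’s a quick way to put numbers on the “many imperfect guesses” idea. It is the classic Condorcet jury theorem, sketched in Python under two big assumptions: every voter (bee or model) is right with the same probability `p`, and their mistakes are independent. Real scouts and real models are never fully independent, so read the output as an idealized illustration, not a prediction. The function name `majority_accuracy` is my own, for illustration.

```python
from math import comb


def majority_accuracy(n_voters: int, p: float) -> float:
    """Probability that a strict majority of n independent voters is correct.

    Assumes each voter is correct with the same probability p and that
    their mistakes are independent. Use an odd n_voters to avoid ties.
    """
    majority = n_voters // 2 + 1
    # Sum the binomial probabilities of every outcome where the majority is right.
    return sum(
        comb(n_voters, k) * p**k * (1 - p) ** (n_voters - k)
        for k in range(majority, n_voters + 1)
    )


if __name__ == "__main__":
    # Each individual voter is right only 60% of the time.
    for n in (1, 3, 11, 101):
        print(f"{n:>4} voters -> majority correct {majority_accuracy(n, 0.6):.3f}")
```

With `p = 0.6`, three voters already reach 0.648 (0.6³ + 3 × 0.6² × 0.4), and a hundred-odd voters push the majority above 95%. The independence assumption is doing a lot of work here: if every voter makes the same mistake, adding more of them helps nothing.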

The Democratic Hive: When a colony needs to move, scouts fly out to vet potential homes. They return and share their findings through the Waggle Dance, a movement that encodes the direction and distance of the site, while the dancer’s enthusiasm signals its quality. It works like a voting system. The better the site, the more enthusiastic the dance. Enthusiastic dances attract more scouts. More scouts lead to more votes. Once enough scouts have gathered at one site (a quorum), the debate ends and the swarm acts, flying off to its new home, typically a sheltered cavity such as a hollow tree. No single bee sees the full picture, yet the colony usually picks the best site on offer. AI ensemble methods work in much the same way.
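The quorum idea, where the debate ends once one site collects enough support, can be sketched as a toy simulation. This is a deliberately simplified model, not a faithful one: each round, every scout already committed to a site recruits `quality` new scouts (standing in for dance enthusiasm), and the first site to reach the quorum wins. Real colonies also let dances fade over time and sense the quorum at the site itself. The function `choose_site`, its parameters, and the site qualities are all hypothetical.

```python
def choose_site(qualities, quorum=20.0, initial_scouts=1.0, max_rounds=100):
    """Toy quorum model: return (site_index, round) for the first site to hit quorum.

    qualities: one number per candidate site, from 0.0 (poor) to 1.0 (excellent).
    Returns (None, max_rounds) if no site ever reaches the quorum.
    """
    scouts = [initial_scouts] * len(qualities)
    for round_num in range(1, max_rounds + 1):
        # Recruitment: better sites get livelier dances, so their support grows faster.
        scouts = [n + n * q for n, q in zip(scouts, qualities)]
        # Quorum check: the first site over the threshold ends the debate.
        for site, n in enumerate(scouts):
            if n >= quorum:
                return site, round_num
    return None, max_rounds


if __name__ == "__main__":
    # Three hypothetical candidate sites: a poor one, a great one, a decent one.
    print(choose_site([0.2, 0.9, 0.5]))  # -> (1, 5)
```

No scout compares all three sites. The best one wins simply because its support snowballs fastest, which is the same “no CEO” dynamic described above.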

From Bees to Algorithms: The analogy carries over neatly from biology to technology. Instead of one model, you use many. Each explores the data from a different perspective, just as the scout bees explore different sites.

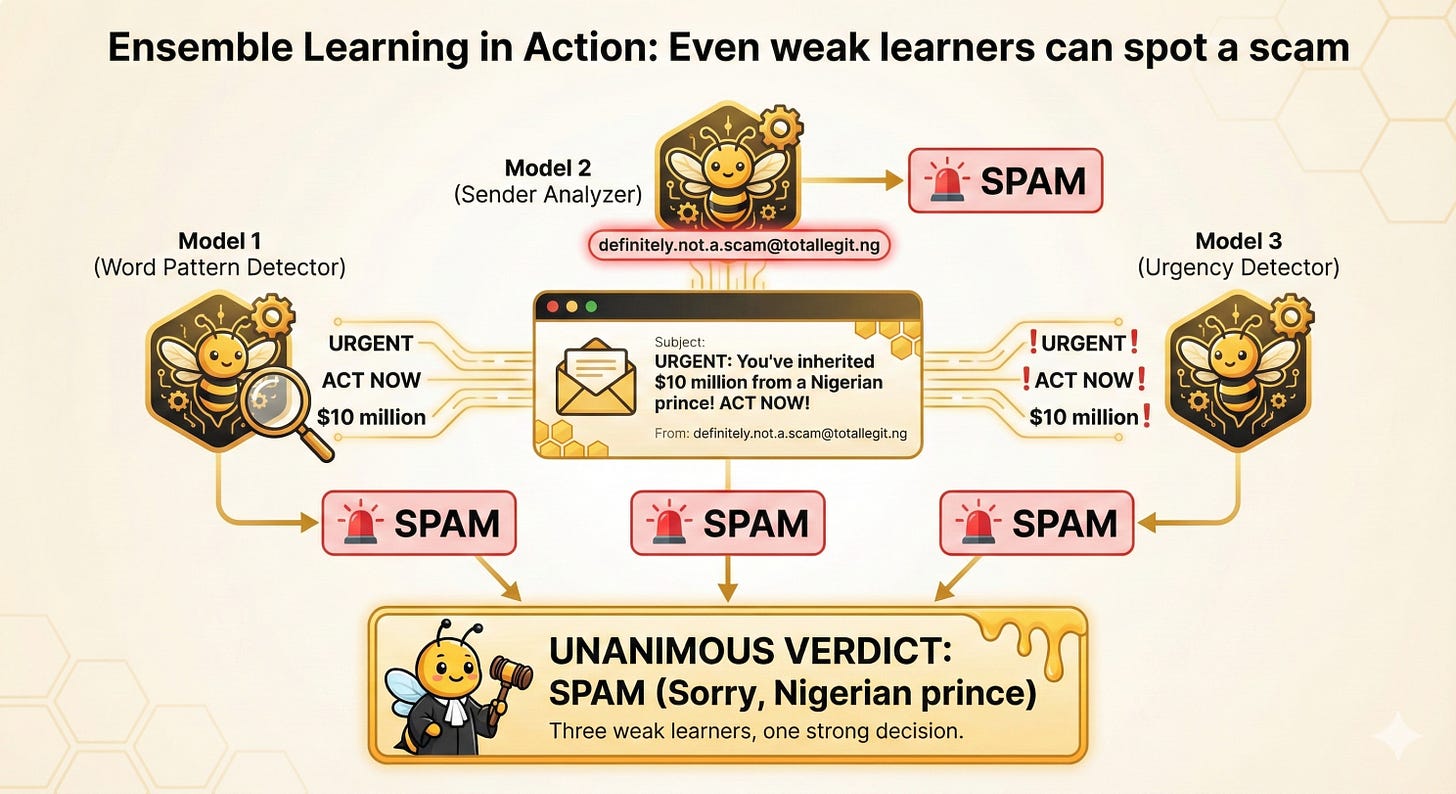

Imagine a spam filter powered by three distinct models. One looks at word patterns, one analyzes the sender, and one checks for urgency signals. Alone, each makes mistakes. Together, they are far more reliable, as long as they don’t all make the same mistakes. If two out of three say “Spam,” the email goes to the junk folder. The majority rules. That is ensemble learning: taking many weak guesses and turning them into one high-confidence answer.
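Here is that two-out-of-three rule as a minimal Python sketch (often called “hard voting”). The three “models” are hypothetical stand-ins: crude keyword and allow-list rules instead of trained classifiers, so the sketch stays self-contained. The function names, word lists, and email addresses are all made up for illustration.

```python
from typing import Callable, Sequence

# Hypothetical stand-ins for three trained models. Real filters would use
# learned classifiers; crude keyword rules keep the sketch self-contained.


def word_pattern_model(email: dict) -> bool:
    """Votes 'spam' if the body contains classic spam vocabulary."""
    spam_words = ("winner", "free", "prize", "crypto")
    body = email["body"].lower()
    return any(word in body for word in spam_words)


def sender_model(email: dict) -> bool:
    """Votes 'spam' if the sender is not on a (hypothetical) known-contacts list."""
    known_contacts = {"mom@example.com", "boss@example.com"}
    return email["sender"] not in known_contacts


def urgency_model(email: dict) -> bool:
    """Votes 'spam' if the body leans on pressure phrases."""
    urgency_phrases = ("act now", "urgent", "expires today")
    body = email["body"].lower()
    return any(phrase in body for phrase in urgency_phrases)


def majority_vote(models: Sequence[Callable[[dict], bool]], email: dict) -> bool:
    """Hard voting: flag as spam only if more than half of the models say so."""
    votes = sum(model(email) for model in models)
    return votes > len(models) / 2


if __name__ == "__main__":
    models = [word_pattern_model, sender_model, urgency_model]
    promo = {"sender": "promo@example.net", "body": "You are a WINNER! Claim your prize."}
    memo = {"sender": "boss@example.com", "body": "Urgent: the report is due today."}
    print(majority_vote(models, promo))  # True: 2 of 3 models flag it
    print(majority_vote(models, memo))   # False: only the urgency model flags it
```

The second email shows why the vote matters: the urgency model raises a false alarm on a legitimate memo from the boss, and the other two models outvote it.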

The Hive Mind in Action: You interact with these “digital swarms” every day:

Netflix recommendations: Multiple models voting on your taste profile.

Credit scoring: Lenders combining risk models to get a more reliable picture of your finances.

Voice assistants: Speech systems like Siri and Alexa can combine several models to decode accents and intent.

Medical AI: Doctors using consensus models to verify diagnoses.
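Not every swarm votes a simple yes or no. A lender, for example, may want an estimated probability of default rather than a verdict. A common way to combine such outputs is “soft voting”: average the models’ predicted probabilities, optionally giving more weight to the models you trust more. A minimal sketch follows. `soft_vote` is a hypothetical helper, and the risk estimates and weights are made-up numbers, not any lender’s actual method.

```python
def soft_vote(probabilities, weights=None):
    """Soft voting: weighted average of the probabilities predicted by each model."""
    if weights is None:
        weights = [1.0] * len(probabilities)  # equal trust in every model
    if len(weights) != len(probabilities):
        raise ValueError("need exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(p * w for p, w in zip(probabilities, weights)) / total


if __name__ == "__main__":
    # Hypothetical default-risk estimates from three models for one applicant.
    risk_estimates = [0.10, 0.30, 0.20]
    print(soft_vote(risk_estimates))             # plain average
    print(soft_vote(risk_estimates, [2, 1, 1]))  # trust the first model twice as much
```

Soft voting keeps each model’s confidence instead of rounding it to a yes/no, which is often useful when the final output is a score. scikit-learn exposes the same idea through `VotingClassifier(voting="soft")`.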

In the real world, no single model is perfect. But a well-designed swarm rarely fails.

Final Thoughts

Every time I work with ensemble methods, I think about those bees streaming out of the ground.

No single bee decided to attack. But together, they made an excellent decision. (For them. Less excellent for me.)

That’s the power of distributed intelligence. Whether you’re a hive defending territory or an AI system filtering spam, the principle is identical: many perspectives, one strong answer.

The swarm rarely gets it wrong.

The 30-Second Glossary

Ensemble Methods: The “Council of Experts.” Combining several average models to create one genius predictor.

Weak Learner: A model that is only slightly better than random guessing on its own. (Think: a single, slightly confused intern.)

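Bagging: “Parallel Voting.” Short for “bootstrap aggregating”: training models independently, each on a different random resample of the data, then averaging their answers (or taking a vote).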

Boosting: “Sequential Fixing.” Training models one by one, where each new model concentrates on the examples the previous ones got wrong.

Swarm Intelligence: When a group of simple agents creates complex, smart behavior. No CEO required.
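To make the Bagging and Boosting entries concrete, here is a from-scratch sketch in plain Python built on about the weakest learner there is: a “decision stump” that asks one yes/no question about a single number. The bagging half trains each stump on a bootstrap resample (random draws with replacement) and takes a majority vote. The boosting half follows AdaBoost, one specific boosting algorithm among several. The toy dataset and every function name are illustrative assumptions, not a library API; for real work, tested versions exist in scikit-learn (`BaggingClassifier`, `AdaBoostClassifier`).

```python
import math
import random

# Toy setup: numbers x with labels +1 / -1. The weak learner is a "decision
# stump": one question, "is x >= threshold?", plus a polarity saying which
# answer means +1.


def fit_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best single split."""
    best = None
    for t in sorted(set(xs)):
        for polarity in (1, -1):
            err = sum(
                w for x, y, w in zip(xs, ys, weights)
                if (polarity if x >= t else -polarity) != y
            )
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def stump_predict(stump, x):
    _, t, polarity = stump
    return polarity if x >= t else -polarity


def bagging(xs, ys, n_models=25, seed=0):
    """Parallel voting: each stump trains on its own bootstrap resample."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # draw n points with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx], [1.0] * n))
    # Majority vote across stumps; an odd n_models avoids ties.
    return lambda x: 1 if sum(stump_predict(m, x) for m in models) >= 0 else -1


def adaboost(xs, ys, rounds=10):
    """Sequential fixing (AdaBoost): each stump focuses on earlier mistakes."""
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(rounds):
        stump = fit_stump(xs, ys, weights)
        err = max(stump[0], 1e-10)  # avoid dividing by zero on a perfect stump
        if err >= 0.5:  # no better than a coin flip, so stop
            break
        alpha = 0.5 * math.log((1 - err) / err)  # more accurate stump, bigger say
        ensemble.append((alpha, stump))
        # Misclassified points gain weight, correct ones lose it; then renormalize.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return lambda x: 1 if sum(a * stump_predict(s, x) for a, s in ensemble) >= 0 else -1


if __name__ == "__main__":
    xs = [1, 2, 3, 4, 5, 6, 7, 8]
    ys = [-1, -1, 1, 1, 1, 1, -1, -1]  # positive only in the middle
    stump = fit_stump(xs, ys, [1.0] * len(xs))
    boosted = adaboost(xs, ys, rounds=3)
    print(sum(stump_predict(stump, x) == y for x, y in zip(xs, ys)))  # 6 (of 8)
    print(sum(boosted(x) == y for x, y in zip(xs, ys)))               # 8 (of 8)

    sep_ys = [-1, -1, -1, -1, 1, 1, 1, 1]  # cleanly separable at x = 5
    bagged = bagging(xs, sep_ys)
    print(bagged(1), bagged(8))
```

A single stump can only cut the number line once, so it cannot capture “positive only in the middle.” After three boosting rounds, the weighted combination of stumps gets all eight points right. That is the “confused intern” becoming part of a competent team.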

FAQ

Is an ensemble always better? For accuracy? Usually, as long as the individual models are reasonably good and make different mistakes. For speed? No. It takes more computing power to run a swarm than a single model.

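Is this just “overfitting” with extra steps? Actually, it’s mostly the opposite. Averaging many models smooths out the weird quirks of each one, making the ensemble more reliable, not less. (One caveat: boosting can still overfit if you keep adding models for too long.)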

Do bees actually do this? 100%. They are nature’s original decision engines. We’re just copying their homework.
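For the curious, the “smoothing out quirks” answer has a compact mathematical form for averaging-style ensembles like bagging. As a sketch, assume $M$ models whose predictions $\hat{f}_m(x)$ all have the same variance $\sigma^2$ and the same pairwise correlation $\rho$ (an idealization; real ensemble members are never this uniform). Expanding the variance of their average gives:

$$
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M}\hat{f}_m(x)\right) = \frac{1}{M^2}\Big(M\sigma^2 + M(M-1)\,\rho\,\sigma^2\Big) = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
$$

Adding more models shrinks only the second term. The first term stays put, which is why the members need to disagree (low $\rho$). A swarm of identical scouts is no smarter than one scout.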

Enjoyed this? Please forward it to someone who likes to learn about AI and be entertained at the same time.

#artificialintelligence #ensemblemethods #machinelearning #swarmintelligence #bees #deeplearningwiththewolf #dianawolftorres #randomforest #neuralnetworks
