Peeling Back Nano Banana

The Banana With Bite

Why It’s Called Nano Banana

Before it was a headline, it was just a codename on a leaderboard: Nano Banana. Buried in LMArena’s Image Edit Arena, the anonymous model quietly outperformed the competition. When Google revealed it as Gemini 2.5 Flash Image, the mystery peeled away—but the nickname didn’t. The AI world kept the banana, because sometimes the quirkiest names mark the biggest shifts.

Editing Is the New Magic Trick

For years, AI image models were measured by how imaginative they could get. Could they paint wild fantasy worlds? Could they invent photorealistic faces? Nano Banana flips the script: it’s an editing-first model. And that matters more than you might think — not least because I can finally cross “learn Photoshop” off my to-do list.

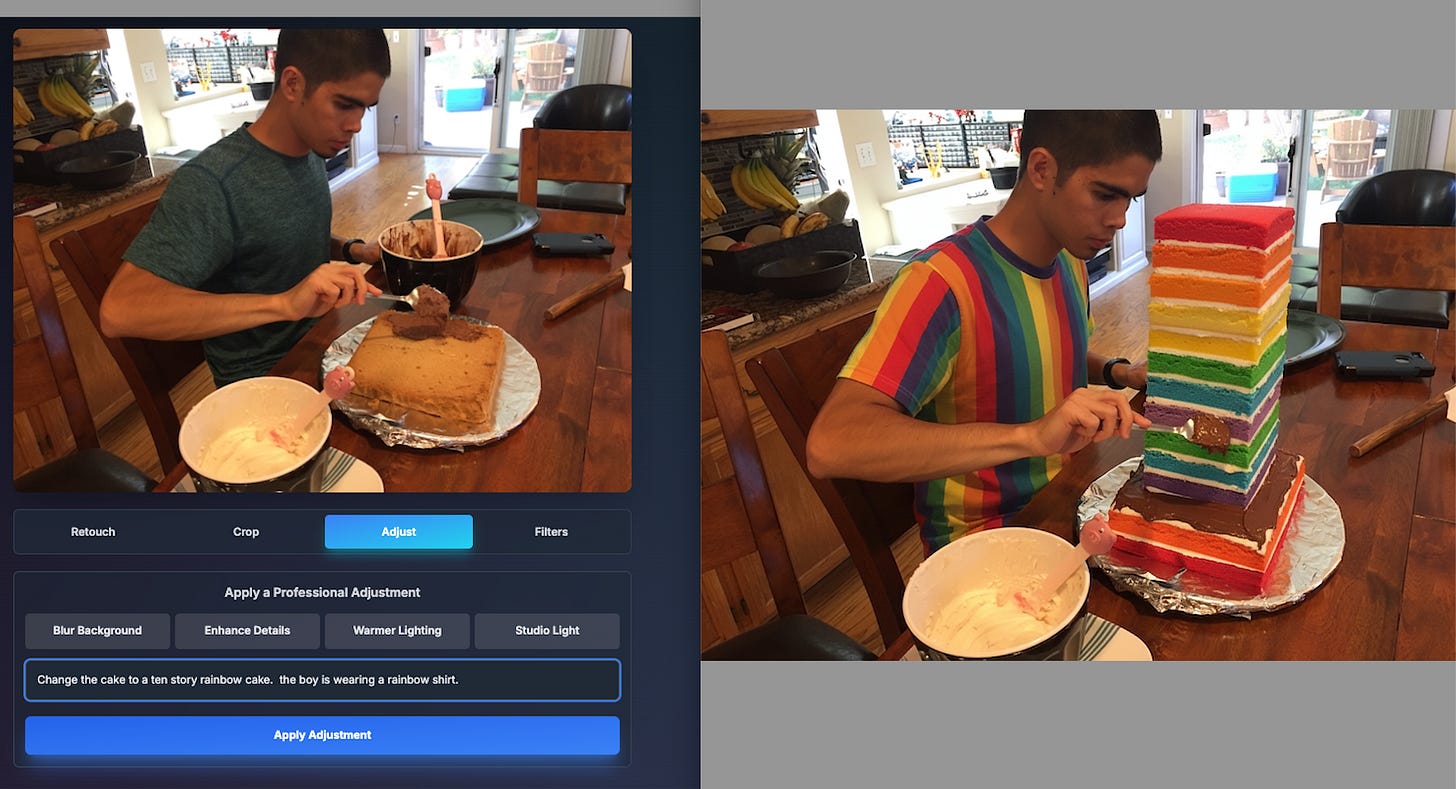

Prompt: “Change the cake to a ten-story rainbow cake, the boy is wearing a rainbow shirt.”

How Nano Banana Works

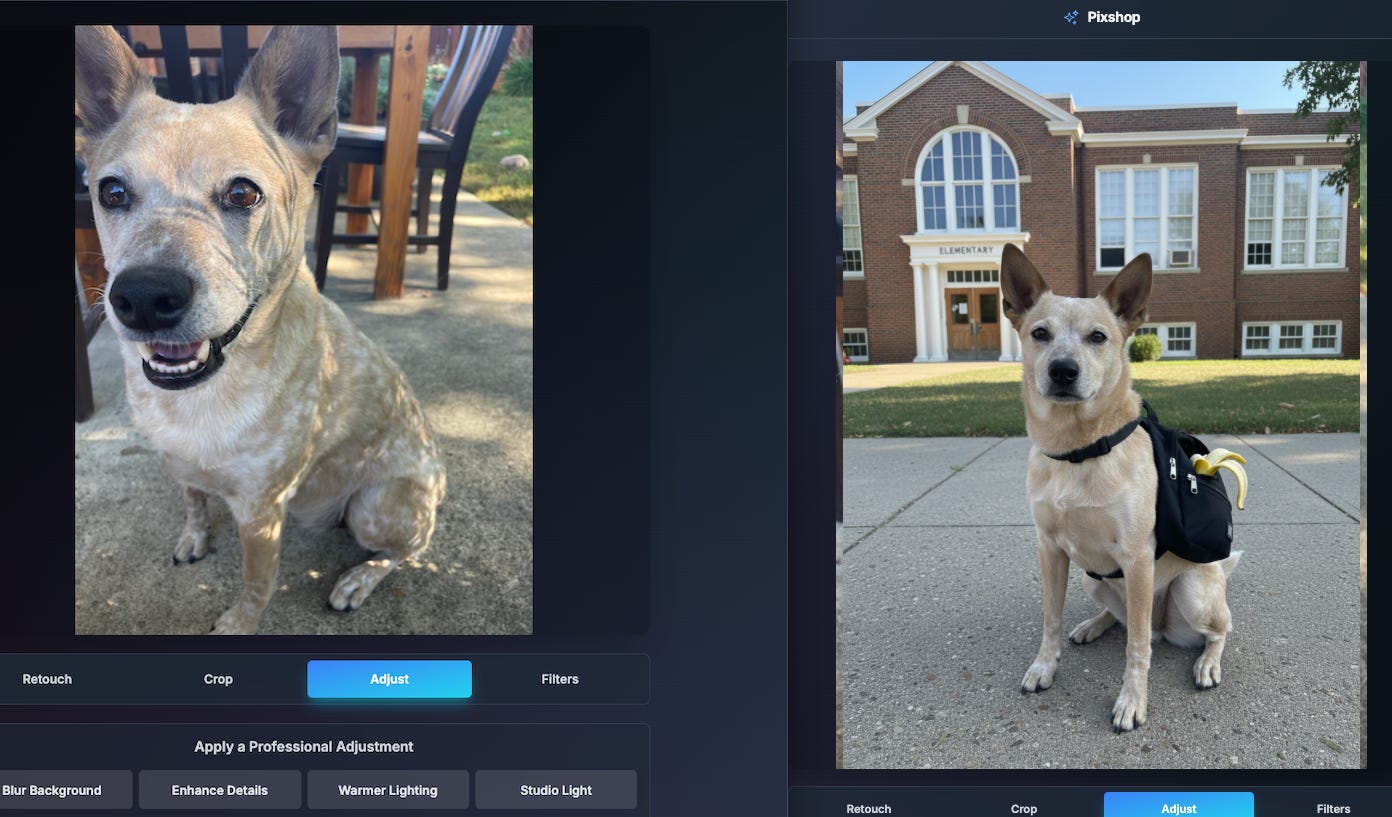

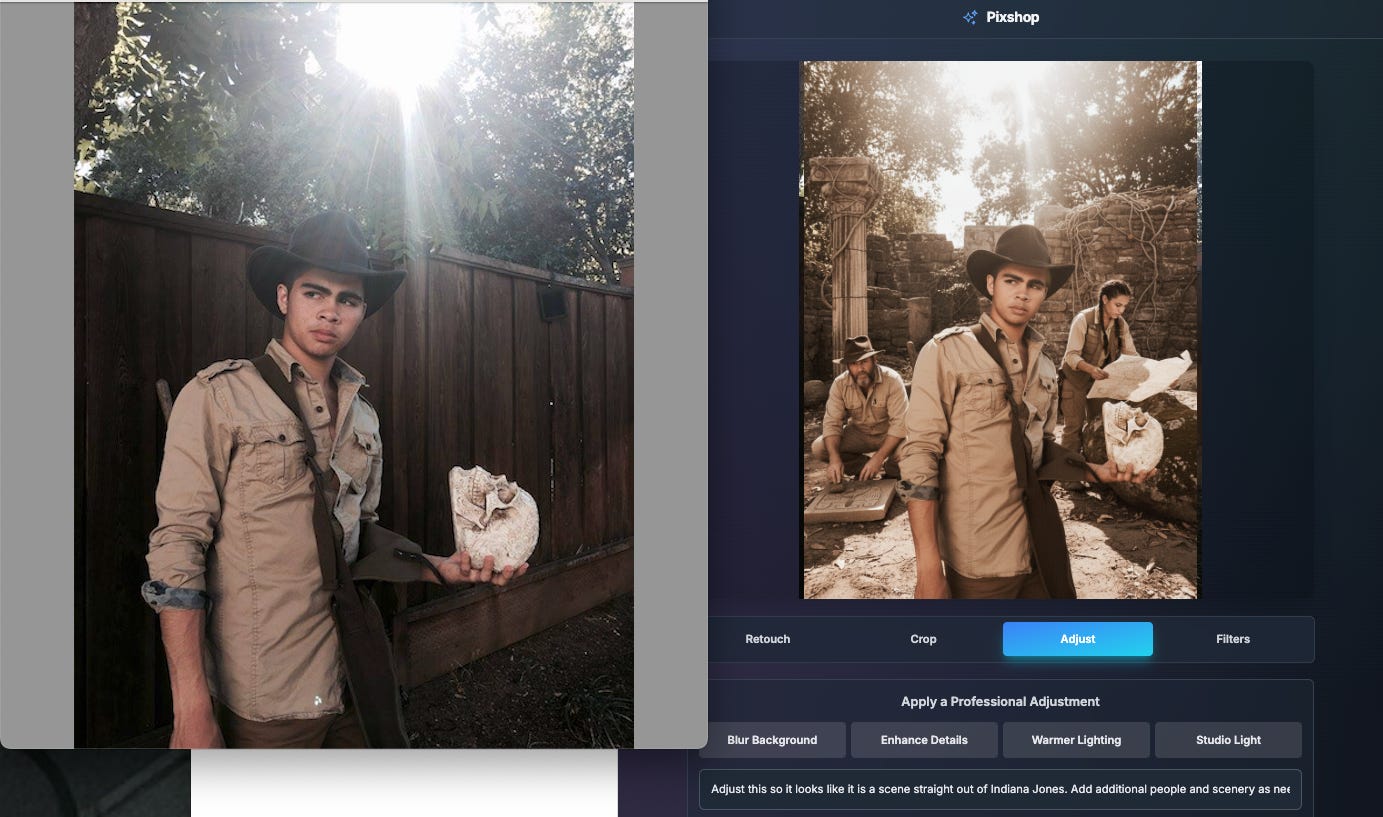

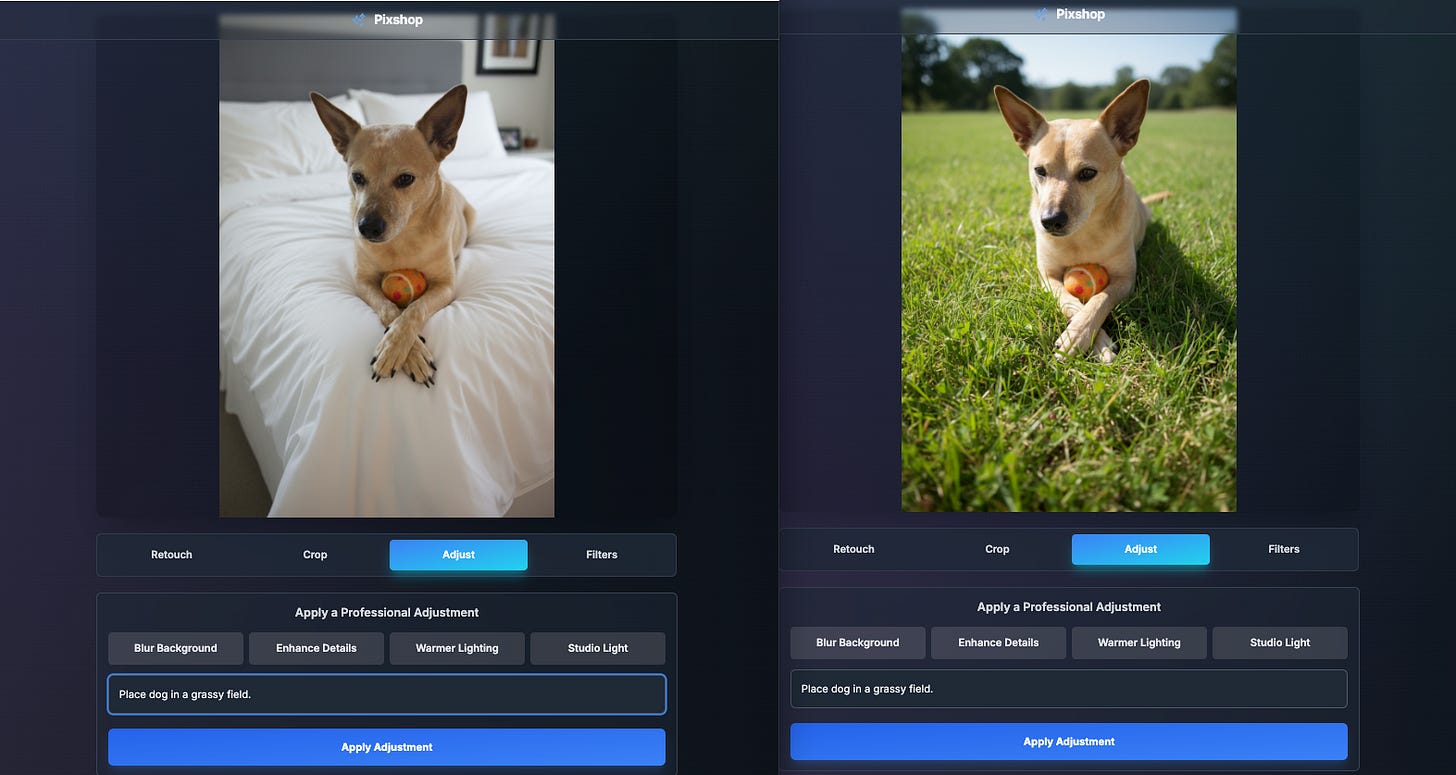

At its core, Nano Banana is designed for localized image edits. Instead of reinventing the entire picture, it respects what’s already there — perspective, textures, lighting — and modifies only what you ask it to.

Upload an image. Write a prompt. Watch the edit appear. That’s the workflow.

Examples:

“Remove the red cone in the lower right corner. Keep everything else unchanged.”

“Replace the phone in the person’s left hand with a coffee cup. Match grip, shadows, and angle.”

“Change the view to a side angle. Keep poses and expressions consistent.”

“Place the dog in front of a school building. Add a backpack with a banana sticking out.”

The brilliance isn’t in novelty; it’s in control. Nano Banana doesn’t force you to start from a blank canvas. It lets you direct existing visuals with surgical accuracy.

Why It’s Different

Models like DALL·E or Stable Diffusion are best known as generators: they excel at creating from scratch. Nano Banana is an editor. That distinction may sound subtle, but it changes how we use AI entirely.

Think of it this way:

Generators are painters with a blank canvas.

Nano Banana is Photoshop that takes plain-language commands.

This isn’t just convenience. It opens new workflows: refining assets instead of redoing them, improving existing work instead of starting over, and building visual consistency across projects.

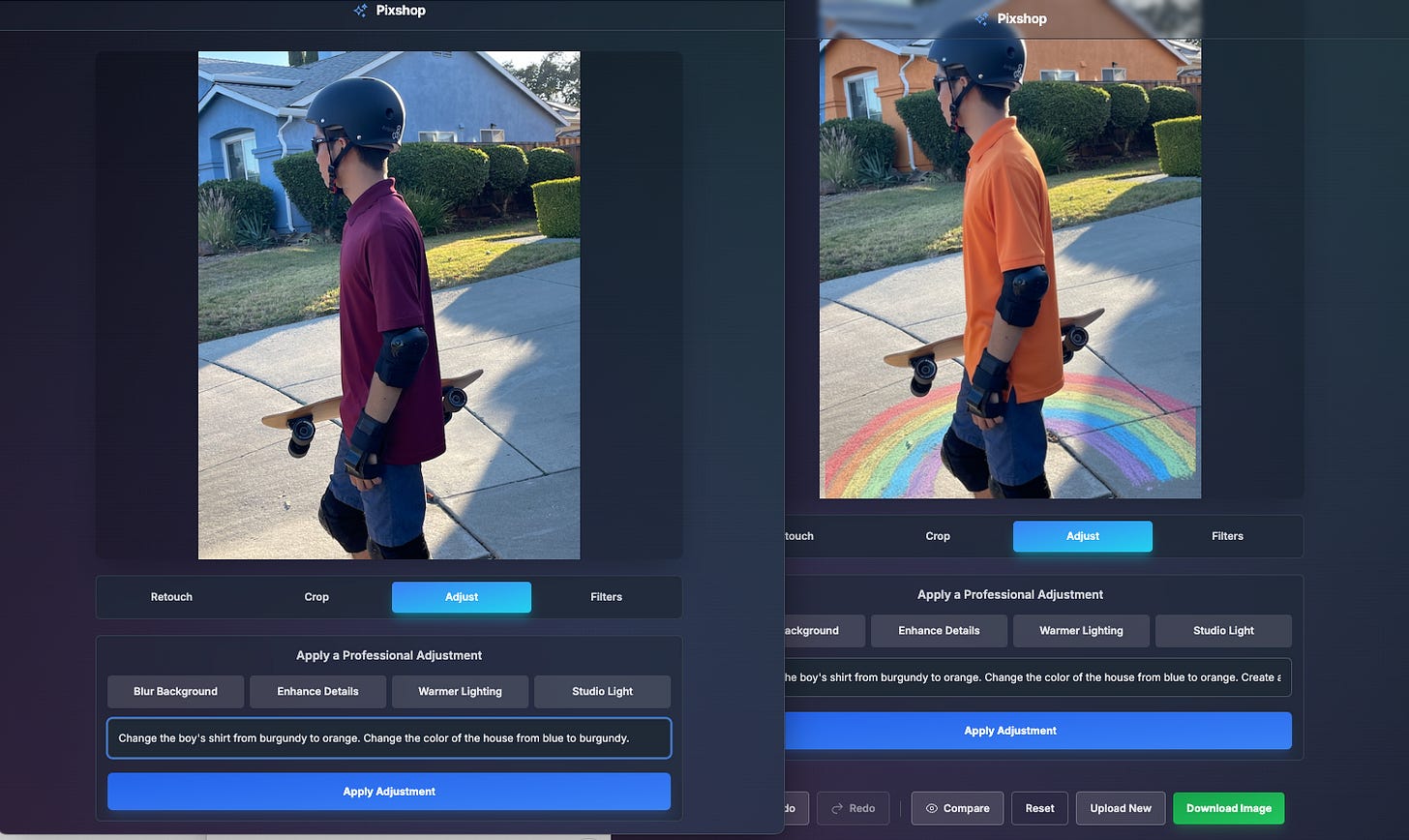

Prompt: “Make the boy’s shirt orange, the house orange. Add rainbow chalk drawing to driveway.”

Why It Works So Well

What makes Nano Banana stand out is its ability to preserve context. It maintains:

Lighting: Shadows fall in the right place.

Perspective: Objects stay aligned.

Texture: Fabric still looks like fabric; wood still looks like wood.

That realism likely reflects training on large numbers of “before and after” images, which would teach the model what a believable edit should look like. The result: edits that don’t scream “AI.”

Prompt: “Adjust this so it looks like a scene straight out of Indiana Jones. Add additional people and scenery as needed.”

Why It’s Important

Nano Banana’s editing-first approach matters because it democratizes precision.

For students: it can clarify diagrams or brighten lab photos — provided they use it ethically (to communicate results, not fabricate them).

For designers: it reduces time and cost by transforming one asset into dozens of variations.

For teachers, marketers, and small businesses: it puts pro-level editing in reach without pro-level tools.

It’s a shift toward accessible precision editing at scale.

It’s also a lot of fun. And, right now, it’s free to use.

Prompt: “Place dog in a grassy field.”

Where You Can Use It

Nano Banana is already embedded in multiple platforms:

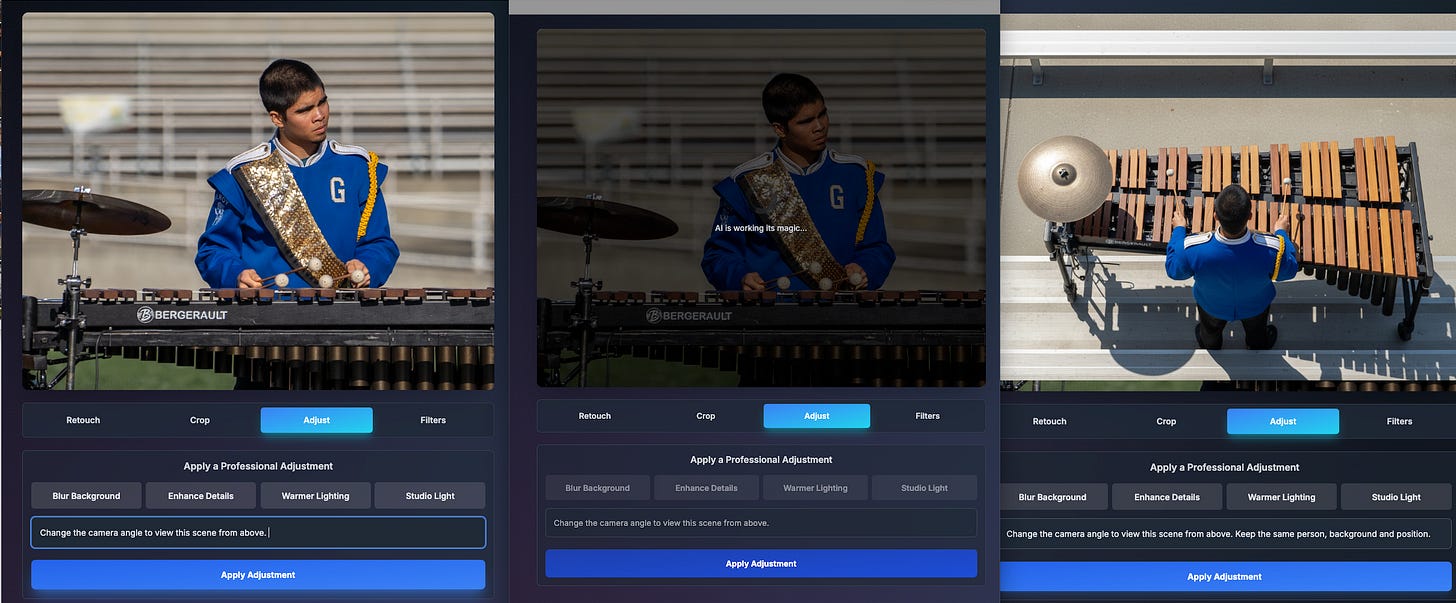

Google AI Studio → the most direct way to experiment. It started as a developer sandbox, but it’s now accessible to anyone, no coding required. The screenshots in this article are from Google AI Studio. Link: aistudio.google.com

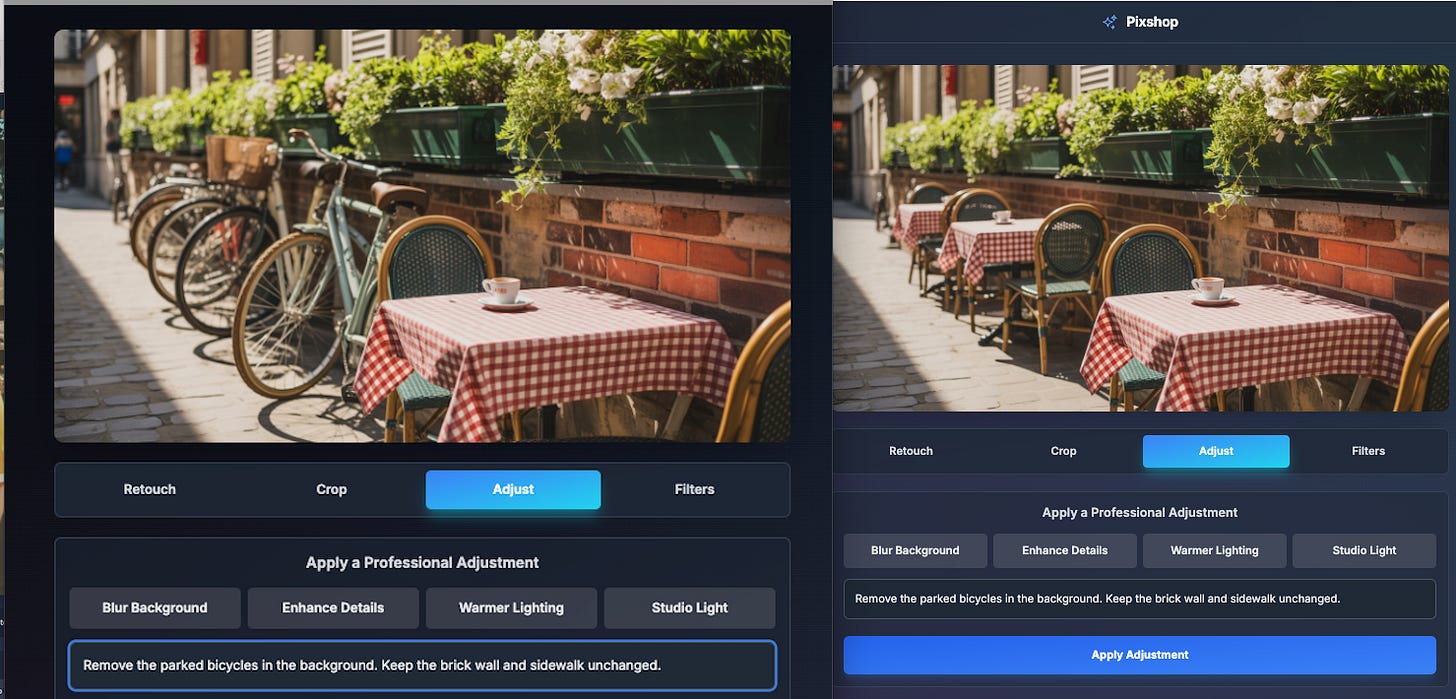

I’ve been using Pixshop, one of the apps bundled inside AI Studio, because it streamlines the image-editing process. But there are many places you can use the editor. Find the one that best suits your editing style.

Gemini (Google’s chatbot) → upload an image in chat and request edits.

Creative platforms → Freepik, Crea, and Higgsfield have built Nano Banana into their workflows.

Adobe Firefly → Adobe has added Nano Banana as a partner model in some Firefly features.

👉 Rule of thumb: If you want precise control, use AI Studio. If you want playful experimentation, Gemini works fine.

Prompt Pack

To showcase Nano Banana’s strengths, here are some sample prompts:

Object Removal: “Remove the parked bicycles in the background. Keep the brick wall and sidewalk unchanged.”

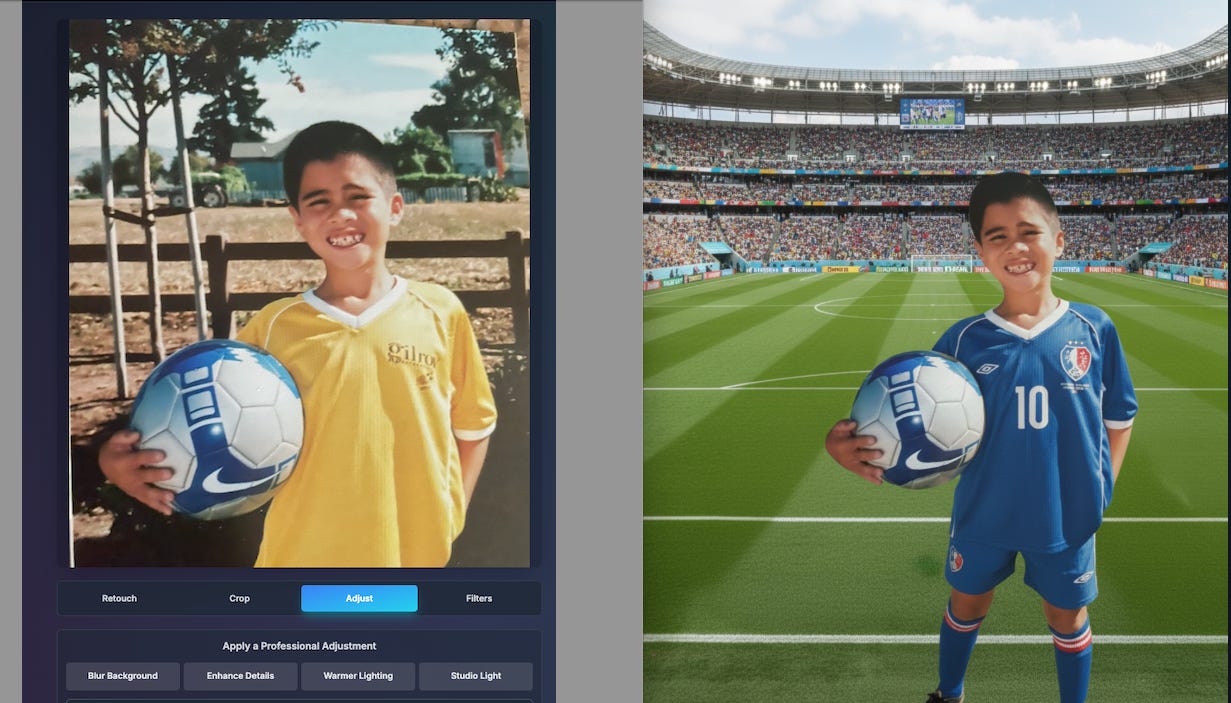

Background Replacement: “Change the background to the World Cup. Match lighting. Make child look like a member of the World Cup soccer team.”

Color Change: “Change the red sneakers in the front row to neon green. Preserve laces and texture.”

Partial Style Transfer: “Transform only the sandwich on the plate into a watercolor painting style. Keep background realistic.”

Time Shift: “Make this classroom look like the 1980s. Change computers to box monitors and adjust clothing styles.”

SynthID

Google has embedded SynthID, its invisible watermarking system, into Nano Banana. SynthID tags every AI-generated or AI-edited image at the pixel level. Unlike a visible logo or text mark, it can’t be cropped out or erased with simple editing. It works by subtly altering pixel patterns in ways imperceptible to the human eye but detectable with Google’s verification tools. For business users, this offers a practical safeguard: marketing teams, publishers, and regulators can confirm whether an image was AI-assisted without compromising design quality. While not unbreakable, SynthID is a significant step toward traceability and accountability in AI-driven media, a capability that will only grow more important as AI content becomes indistinguishable from the real thing.

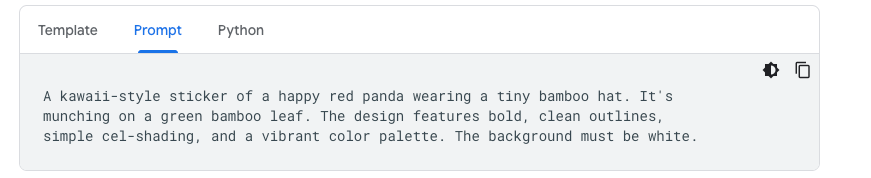

Google’s Official Prompt Guide

Google has released its official prompt guide! It’s nestled in the Google AI for Developers space, but accessible to everyone.

Image generation with Gemini (aka Nano Banana)

Tell A Story

One of the most important lessons from Google’s prompt guide is this: don’t just throw a handful of keywords at the model and hope for the best. Nano Banana is built to understand language deeply, which means it responds far better to full, descriptive sentences. Instead of typing “banana, spaceship, galaxy,” you’ll get richer results if you describe the scene like you’re telling a story: “A tiny glowing banana spaceship drifting through a galaxy of purple nebulae, lit by starlight.” That narrative framing gives the model context, mood, and atmosphere to work with—and the difference in quality can be stunning.

Be A Director

Another secret? Think like a photographer or a director. If you want a photorealistic portrait, borrow words from the world of cameras—“wide-angle shot,” “softbox lighting,” “shallow depth of field.” If you’re after a comic-book effect, describe the art style and panel layout. The guide emphasizes that Gemini’s strength lies in translating those precise visual cues into accurate, polished images. In other words, the more you shape your request like a creative brief, the closer the output will feel to professional artwork.
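Taken together, the two tips suggest treating a prompt as a short creative brief: a narrative sentence for the scene, plus a director's note with camera vocabulary. As a minimal sketch in Python, the structure below turns those pieces into prompt text. `SceneBrief` and its fields are hypothetical illustrations. They are not taken from Google's prompt guide or from the Gemini API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneBrief:
    """Pieces of a 'creative brief' prompt (hypothetical structure)."""
    subject: str                # who or what the image is about
    setting: str                # what the subject is doing, and where
    mood: str = ""              # optional atmosphere, e.g. "lit by starlight"
    camera: List[str] = field(default_factory=list)  # e.g. ["wide-angle shot"]

    def to_prompt(self) -> str:
        # One descriptive sentence instead of a bag of keywords.
        text = f"{self.subject} {self.setting}"
        if self.mood:
            text += f", {self.mood}"
        text += "."
        if self.camera:
            # Photography terms go in a second sentence, like a director's note.
            text += " Camera: " + ", ".join(self.camera) + "."
        return text


brief = SceneBrief(
    subject="A tiny glowing banana spaceship",
    setting="drifting through a galaxy of purple nebulae",
    mood="lit by starlight",
    camera=["wide-angle shot", "shallow depth of field"],
)
print(brief.to_prompt())
# A tiny glowing banana spaceship drifting through a galaxy of purple nebulae, lit by starlight. Camera: wide-angle shot, shallow depth of field.
```

Without the `camera` list, the output is exactly the story-style sentence from the "Tell A Story" example, so you can start with the narrative and layer on photographic detail only when you need it.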

Try Something New

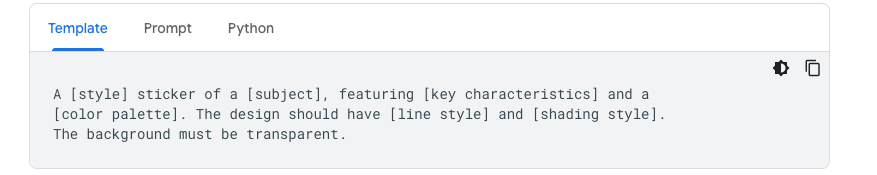

To create stickers, icons, or assets, be explicit about the style and request a transparent background.

Iterate (It’s okay to get it wrong.)

Finally, don’t expect perfection on the first try. Nano Banana is designed for iteration; it’s like having a conversation with an endlessly patient illustrator. You can start broad, then refine step by step: “Great, but make the colors warmer.” “Keep the same background, but change the character’s expression to a smile.” This conversational loop is where Gemini really shines, turning rough ideas into refined, ready-to-share visuals. Think of it less as typing prompts into a box and more as a creative partnership, one where your words guide the brushstrokes. The results I shared in this article took me multiple tries. Each try (each brushstroke) brought me closer to the result I wanted.

Final Thoughts

Nano Banana is playful by name, serious by design. It’s one of the first widely deployed models to pair powerful editing with watermarking safeguards. That balance of creativity and accountability may define the next era of AI adoption.

Listen to a podcast version of this article.

#nanobanana #maawolfe #mreflow #geminiflash #imagediting #promptengineering #ethics #ai #GenerativeAI #deeplearningwiththewolf #dianawolftorres #ContentAuthenticity #Watermarking #Design

Matt Wolfe did a great job explaining how to use Nano Banana in this video.

Note: His preferred place to use Nano Banana is Google’s AI Studio. (This is also how I use Nano Banana.)

Pro Tip: Matt’s #FutureTools newsletter and website (futuretools.io) are also excellent resources for anyone who wants to dig deeper into more advanced AI tools.