Physical AI: The Next Evolution in Machine Intelligence

How Machines Are Learning to Master the Physical World

At CES 2025, Nvidia CEO Jensen Huang made a statement that captured the attention of the technology world: "The ChatGPT moment for robotics is coming." This wasn't mere hyperbole. It signaled a fundamental shift in how artificial intelligence will interact with our physical world. While ChatGPT revolutionized how we interact with digital information, Physical AI promises to transform how machines understand and manipulate the physical environment around us.

Physical AI isn't an especially intuitive concept, so I made a quick video explaining the basics. The rest of this article goes deeper into the technical details.

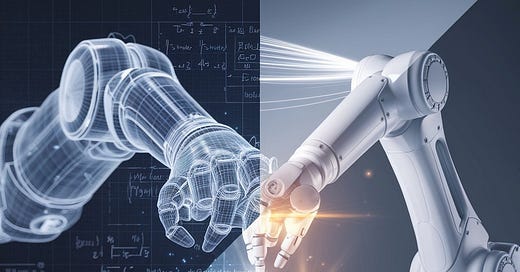

The Architecture of Physical AI

Physical AI integrates multiple technological systems that work in concert to bridge the digital-physical divide. At its core, it combines sensor networks, real-time processing systems, and precision actuators, all orchestrated by AI models that capture how the physical world behaves.

The system architecture consists of three primary layers:

The Perception Layer processes multi-modal sensory input through sensor fusion algorithms. Unlike traditional computer vision or sensor systems that operate in isolation, Physical AI integrates data from multiple sources (visual, tactile, proprioceptive, and environmental sensors) to build a single, consistent picture of the physical space around it.
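Sensor fusion can be illustrated with its simplest textbook form: combining independent, noisy estimates of the same quantity and weighting each by the inverse of its variance, so that more reliable sensors count for more. The sketch below assumes two hypothetical sensors (a stereo camera and a short-range contact sensor) measuring the same distance with known noise. Production perception stacks typically use Kalman filters or learned fusion networks, so this shows the principle rather than any particular system's implementation.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor's estimate of a quantity, plus its noise variance."""
    value: float     # e.g. distance to an object, in metres
    variance: float  # noise variance; smaller means the sensor is more trusted


def fuse(readings: list[Reading]) -> Reading:
    """Inverse-variance weighted fusion of independent, unbiased estimates."""
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    value = sum(w * r.value for w, r in zip(weights, readings)) / total
    # The fused variance is never larger than that of the best single sensor.
    return Reading(value=value, variance=1.0 / total)


camera = Reading(value=1.20, variance=0.04)   # hypothetical stereo-camera depth
tactile = Reading(value=1.00, variance=0.01)  # hypothetical contact/range sensor
fused = fuse([camera, tactile])
print(f"{fused.value:.3f} m, variance {fused.variance:.4f}")
```

The fused variance (1/125 here) is smaller than either sensor's alone, which is the precise sense in which fusion gives a more accurate picture than any single sensor.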

The Cognitive Layer serves as the system's decision-making core, using AI models trained largely in physics-based simulation environments. These models don't just process data; they learn how physical properties such as mass, force, friction, and fluid dynamics govern what happens next. This lets them predict how objects will behave and how the system's own actions will affect the physical world.
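To make "predicting how objects will behave" concrete, here is a hand-written forward model for a single physical principle, kinetic friction. It steps a pushed block forward in time until it stops and checks the result against the closed-form stopping distance d = v0^2 / (2 mu g). Treat it as an assumption-laden stand-in: in a real Physical AI system the predictive model is usually a learned network trained on simulation data rather than an explicit equation, and the function names here are hypothetical.

```python
G = 9.81  # gravitational acceleration, m/s^2


def simulate_slide(v0: float, mu: float, dt: float = 1e-4) -> float:
    """Hypothetical forward model: step a sliding block until friction stops it.

    The block decelerates at mu * g while moving. Returns distance in metres.
    """
    x, v = 0.0, v0
    while v > 0.0:
        v_next = max(v - mu * G * dt, 0.0)  # friction never reverses the motion
        x += 0.5 * (v + v_next) * dt        # trapezoidal position update
        v = v_next
    return x


def analytic_slide(v0: float, mu: float) -> float:
    """Closed-form stopping distance d = v0^2 / (2 * mu * g)."""
    return v0 ** 2 / (2 * mu * G)


# "What happens if I push this box at 2 m/s?" on two hypothetical surfaces.
for surface, mu in [("rubber mat", 0.8), ("polished floor", 0.2)]:
    print(surface, round(simulate_slide(2.0, mu), 3), round(analytic_slide(2.0, mu), 3))
```

Quartering the friction coefficient quadruples the predicted slide, which is exactly the kind of consequence a planner needs to anticipate before it pushes anything.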

The Action Layer translates cognitive decisions into precise physical movements through robotic hardware and control systems. This layer must respect real-world physical constraints, such as actuator torque limits, inertia, and latency, while maintaining the accuracy and adaptability that complex tasks require.
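A classic building block of the action layer is a feedback controller that turns a desired position into motor commands. The sketch below assumes a single rotary joint with no gravity or friction, simulated with simple Euler integration, and uses a proportional-derivative (PD) controller whose torque output is clipped to a hypothetical actuator limit: a small example of the physical constraints this layer must respect. The gains and limits are illustrative values, not tuned for any real robot.

```python
def pd_control_step(target: float, angle: float, velocity: float,
                    kp: float = 40.0, kd: float = 8.0, max_torque: float = 5.0) -> float:
    """Proportional-derivative torque command, clipped to a hypothetical actuator limit."""
    torque = kp * (target - angle) - kd * velocity
    return max(-max_torque, min(max_torque, torque))


def run_joint(target: float, inertia: float = 0.5, steps: int = 2000,
              dt: float = 0.001) -> tuple[float, float]:
    """Simulate one rotary joint (no gravity, no friction) tracking a target angle in radians."""
    angle, velocity = 0.0, 0.0
    for _ in range(steps):
        torque = pd_control_step(target, angle, velocity)
        acceleration = torque / inertia  # rotational form of Newton's second law
        velocity += acceleration * dt    # semi-implicit Euler: update velocity first...
        angle += velocity * dt           # ...then position, using the new velocity
    return angle, velocity


final_angle, final_velocity = run_joint(target=1.0)  # 2 simulated seconds
print(f"angle={final_angle:.3f} rad, velocity={final_velocity:.3f} rad/s")
```

The derivative term damps the motion so the joint settles at the target instead of oscillating, while the torque clip means the joint accelerates more gently at first than an unconstrained controller would command.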

The Learning Process: From Virtual to Physical

What truly sets Physical AI apart is how it learns. Unlike traditional robotic systems that rely on pre-programmed routines, Physical AI uses a hybrid approach that combines training in physics-based simulation with adaptation in the real world.

The process begins in detailed virtual environments built with physics engines. These simulations go far beyond simple 3D modeling: they incorporate physical properties such as mass and friction, material characteristics, and environmental variables. In these virtual spaces, AI models can:

- Develop and test complex behavioral strategies without risking real hardware

- Learn from millions of trial-and-error iterations, run much faster than real time

- Encounter and adapt to edge cases that might be too dangerous or costly to replicate in reality

- Learn the causal links between their actions and the physical consequences
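The "millions of iterations of trial and error" idea can be sketched with a deliberately tiny simulator and the simplest possible learner. Below, a hypothetical policy (a single push speed) is improved by hill-climbing random search: perturb the best speed found so far and keep the change only if the simulated block lands closer to the target. Real systems typically use reinforcement-learning algorithms over far larger policies; this only illustrates why cheap, fast simulated trials matter.

```python
import random

G = 9.81  # gravitational acceleration, m/s^2


def simulated_push(speed: float, mu: float) -> float:
    """Toy simulator (hypothetical): distance a block slides after a push at `speed` m/s."""
    return speed ** 2 / (2 * mu * G)


def learn_push_speed(target: float, mu: float, trials: int = 10_000, seed: int = 0) -> float:
    """Learn a push speed purely by trial and error in simulation."""
    rng = random.Random(seed)
    best_speed, best_error = 0.0, float("inf")
    for _ in range(trials):
        # Explore around the best policy so far (hill-climbing random search).
        candidate = max(0.0, best_speed + rng.gauss(0.0, 0.5))
        error = abs(simulated_push(candidate, mu) - target)
        if error < best_error:  # keep only improvements
            best_speed, best_error = candidate, error
    return best_speed


speed = learn_push_speed(target=1.5, mu=0.3)
print(f"learned speed {speed:.3f} m/s -> slides {simulated_push(speed, 0.3):.3f} m")
```

Ten thousand simulated pushes take a fraction of a second here; the same number of physical trials would occupy a real robot for many hours.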

The transition from virtual to physical environments is one of the hardest parts of Physical AI. The system must bridge what's known as the "reality gap": the inevitable differences between simulation and reality, such as unmodeled friction, sensor noise, and actuator delays. Common techniques include domain randomization, which varies the simulated physics during training so that the real world looks like just one more variation, and domain adaptation algorithms, which adjust learned behaviors to real-world conditions while preserving reliable performance.
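Domain randomization can be illustrated with the same toy sliding-block physics used above. In the sketch below, the "policy" is a single push speed chosen by grid search. One version is trained in a simulator with a single, hypothetically miscalibrated friction coefficient (0.45); the other is trained across 200 simulated episodes, each drawing its friction uniformly from 0.2 to 0.6. Both are then evaluated on a "real" floor with friction 0.25. All numbers and function names are illustrative assumptions.

```python
import random
import statistics

G = 9.81  # gravitational acceleration, m/s^2


def slide_distance(speed: float, mu: float) -> float:
    """Toy physics model: distance a pushed block slides before friction stops it."""
    return speed ** 2 / (2 * mu * G)


def train_push_speed(target: float, frictions: list[float]) -> float:
    """'Train' by grid search: the push speed with the lowest mean error across frictions."""
    candidates = [i * 0.01 for i in range(1, 1001)]  # 0.01 .. 10.00 m/s

    def mean_error(speed: float) -> float:
        return statistics.fmean(abs(slide_distance(speed, mu) - target) for mu in frictions)

    return min(candidates, key=mean_error)


rng = random.Random(42)
# One fixed simulator setting that assumes a grippier floor than the real one.
nominal_policy = train_push_speed(1.0, [0.45])
# Domain randomization: every simulated episode draws its own friction coefficient.
randomized_policy = train_push_speed(1.0, [rng.uniform(0.2, 0.6) for _ in range(200)])

real_mu = 0.25  # the real floor, which the nominal simulator never matched
for name, speed in [("nominal", nominal_policy), ("randomized", randomized_policy)]:
    error = abs(slide_distance(speed, real_mu) - 1.0)
    print(f"{name}: speed={speed:.2f} m/s, real-world error={error:.2f} m")
```

The randomized policy is not perfect on the real floor, but because the real friction lies inside the randomized range, its error is much smaller than that of the policy tuned to one wrong setting.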

Real-World Applications and Business Impact

The business implications of Physical AI extend far beyond traditional automation. In manufacturing, Physical AI systems can adapt to supply chain variations and product customization demands in real time, fundamentally changing the economics of flexible production. In healthcare, AI-powered surgical systems can combine the precision of machines with the adaptability traditionally associated with human surgeons.

The technology's impact on operational efficiency is particularly noteworthy. Physical AI systems can:

- Optimize complex processes by understanding and adapting to physical constraints in real time

- Reduce waste and energy consumption through precise control and prediction

- Enable new levels of customization and flexibility in manufacturing

- Decrease downtime through predictive maintenance based on physical understanding of wear and tear
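The predictive-maintenance point can be sketched with the simplest physically motivated model. Archard's wear law states that wear volume grows in proportion to contact load times sliding distance, so under roughly constant load and duty cycle, wear grows roughly linearly with operating hours. The hypothetical function below fits that straight line by least squares and extrapolates it to a wear limit to estimate remaining useful life; the sample data is invented for illustration.

```python
def remaining_useful_life(hours: list[float], wear_mm: list[float], limit_mm: float) -> float:
    """Fit wear = slope * hours + intercept by least squares, then extrapolate to the limit.

    Returns estimated operating hours left after the last inspection
    (inf if wear is not increasing).
    """
    n = len(hours)
    if n < 2:
        raise ValueError("need at least two inspections")
    mean_h = sum(hours) / n
    mean_w = sum(wear_mm) / n
    cov = sum((h - mean_h) * (w - mean_w) for h, w in zip(hours, wear_mm))
    var = sum((h - mean_h) ** 2 for h in hours)
    slope = cov / var  # mm of wear per operating hour
    intercept = mean_w - slope * mean_h
    if slope <= 0:
        return float("inf")
    hours_at_limit = (limit_mm - intercept) / slope
    return max(0.0, hours_at_limit - hours[-1])


# Hypothetical gripper-pad wear logged every 100 operating hours.
hours = [0, 100, 200, 300, 400]
wear = [0.00, 0.11, 0.19, 0.31, 0.40]
print(f"{remaining_useful_life(hours, wear, limit_mm=1.0):.0f} hours left")
```

With this sample data, wear grows by about 0.001 mm per hour, so the 1.0 mm limit is projected roughly 600 hours after the last inspection. Scheduling maintenance before then is what turns the prediction into less downtime.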

Looking Forward

As we approach what Huang calls the "ChatGPT moment for robotics," businesses need to understand that Physical AI isn't just another incremental advance in automation technology. It represents a fundamental shift in how machines interact with and understand our physical world. The implications for business operations, product development, and service delivery will be profound, potentially reshaping entire industries just as digital transformation did in previous decades.

Key Vocabulary

Physical AI: The integration of artificial intelligence with physical systems, enabling machines to perceive, understand, and interact with the real world in real time.

Digital Twin: A virtual representation of a physical object, process, or system that serves as a real-time digital counterpart for simulation and testing purposes.

Sensor Fusion: The process of combining data from multiple sensors to create a more comprehensive and accurate understanding of the environment than would be possible using a single sensor.

Physics-Based Learning: An AI training approach where systems learn by understanding and working with real-world physical principles rather than just processing data patterns.

Reality Gap: The difference between simulated and real-world conditions that Physical AI systems must adapt to when transitioning from virtual training to physical deployment.

Adaptive Learning: The ability of Physical AI systems to continuously modify their behavior based on real-world experience and feedback.
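Adaptive learning, and the way a digital twin stays synchronized with its physical counterpart, can both be sketched as an online update of a model parameter driven by real-world feedback. Below, a hypothetical model starts from a simulation prior for a floor's friction coefficient, infers the friction implied by each real push, and moves its estimate part of the way toward that observation (an exponential moving average). The "real" floor is simulated without noise, so this is an illustration under idealized assumptions.

```python
G = 9.81  # gravitational acceleration, m/s^2


class AdaptiveFrictionModel:
    """Hypothetical running estimate of a surface's friction coefficient.

    Starts from a simulation prior and nudges the estimate toward the value
    implied by each real push (exponential moving average).
    """

    def __init__(self, prior_mu: float, learning_rate: float = 0.3):
        self.mu = prior_mu
        self.learning_rate = learning_rate

    def observe(self, push_speed: float, slide_distance: float) -> None:
        # Invert d = v^2 / (2 * mu * g) to get the friction this push implies.
        observed_mu = push_speed ** 2 / (2 * G * slide_distance)
        self.mu += self.learning_rate * (observed_mu - self.mu)

    def speed_for(self, target_distance: float) -> float:
        """Push speed the current model predicts will slide the block target_distance."""
        return (2 * self.mu * G * target_distance) ** 0.5


REAL_MU = 0.25                                # true friction, unknown to the model
model = AdaptiveFrictionModel(prior_mu=0.45)  # hypothetical simulation prior
for trial in range(10):
    v = model.speed_for(1.0)
    actual = v ** 2 / (2 * REAL_MU * G)  # what the real floor does (noise-free here)
    model.observe(v, actual)
    print(f"trial {trial}: slid {actual:.3f} m, mu estimate {model.mu:.3f}")
```

Each update closes 30 percent of the gap between the estimate and reality, so the pushes land closer to the 1 m target trial after trial. With noisy real measurements, a smaller learning rate would trade adaptation speed for stability.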

Frequently Asked Questions

How is Physical AI different from regular robotics? Unlike traditional robots, which rely on pre-programmed routines, Physical AI systems can learn, adapt, and make decisions based on real-world physics and environmental interactions. They combine AI algorithms with physical capabilities, enabling them to handle unpredictable situations.

Does Physical AI require special hardware? Physical AI systems typically use standard robotics hardware but require sophisticated sensor arrays and processing systems to handle real-time physics calculations and decision-making. The key difference lies in the software and learning systems rather than specialized hardware.

How long does it take to train a Physical AI system? Training time varies significantly based on the complexity of tasks. While initial virtual training can be accelerated through simulation, real-world adaptation requires actual physical interaction time. Systems continue learning and improving through operation.

What are the main challenges in implementing Physical AI? Key challenges include bridging the reality gap between simulation and physical environments, ensuring safety during learning processes, managing the computational demands of real-time physics processing, and integrating multiple sensor inputs effectively.

Is Physical AI ready for widespread industrial use? While certain applications are already in use, particularly in controlled industrial environments, the technology is still evolving. Early adopters are seeing success in manufacturing, healthcare, and logistics applications.

#PhysicalAI #ArtificialIntelligence #Robotics #IndustryAI #Nvidia #JensenHuang #deeplearning #deeplearningwiththewolf